Process synchronisation in operating systems refers to the coordination of multiple concurrent processes to ensure they behave correctly when accessing shared resources such as memory or files. It aims to prevent problems like race conditions, where the outcome depends on execution timing. Synchronisation mechanisms like locks, semaphores, and barriers enforce orderly access to resources, ensuring data consistency and preventing conflicts.

By synchronising processes, the operating system maintains order, fairness, and integrity, which are essential for stable and efficient multitasking environments. Process synchronisation mechanisms ensure concurrent processes interact properly by coordinating access to shared resources. They prevent issues like deadlock, where processes are indefinitely blocked because each is waiting for a resource held by another.

Additionally, synchronisation fosters cooperation between processes, enabling them to communicate and coordinate their activities effectively. Through mutual exclusion and signalling, synchronisation mechanisms establish rules for accessing critical sections of code or shared data, which maintains system integrity and prevents data corruption. Effective process synchronisation is crucial for the reliable and efficient operation of modern operating systems, ensuring stability and good utilisation of system resources in multitasking environments.

Process synchronisation in operating systems refers to the management of multiple concurrent processes to ensure they coordinate their activities properly, especially when accessing shared resources like memory or files. It involves implementing mechanisms to prevent issues like race conditions, where the outcome depends on the timing of execution, and deadlock, where processes are indefinitely blocked due to resource contention.

Synchronization mechanisms like locks, semaphores, and barriers regulate access to shared resources, maintain data consistency, prevent conflicts, and enable the orderly execution of processes. Effective process synchronization is essential for stable and efficient multitasking environments.

Process synchronization in operating systems is crucial for managing concurrent processes effectively. It ensures that multiple processes cooperate and coordinate their actions efficiently, especially when accessing shared resources.

By implementing synchronization mechanisms like locks, semaphores, and monitors, operating systems regulate access to critical code or data sections, preventing issues such as race conditions and deadlocks. These mechanisms enable processes to communicate, synchronize their activities, and maintain system integrity. Proper process synchronization fosters orderly execution, resource utilization, and stability in multitasking environments, which is essential for the reliable operation of modern operating systems and the applications running on them.

Consider a scenario where processes A and B must access a shared printer. They might attempt to print simultaneously without synchronisation, leading to garbled output or printer errors. The operating system employs synchronisation mechanisms such as locks or semaphores to ensure orderly access.

When Process A wants to print, it requests a lock from the operating system. If the lock is available, Process A acquires it, prints its document, and then releases it. Meanwhile, if Process B also wants to print, it must wait until Process A releases the lock before acquiring it and proceeding with printing.

By using locks, the operating system ensures that only one process can access the printer at a time, preventing conflicts and ensuring that print jobs are processed sequentially, maintaining the integrity of the printed output. This coordination is essential for the reliable and efficient operation of multitasking environments.
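The printer scenario can be sketched with a POSIX mutex. This is a minimal illustration under stated assumptions, not how a real print spooler works: threads stand in for processes A and B, and the hypothetical `print_job` function and `printer_log` array stand in for the printer. Each job emits all of its lines while holding `printer_lock`, so lines from different jobs cannot interleave.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <pthread.h>
#include <stdio.h>

#define LINES_PER_JOB 3
#define MAX_JOBS 8

/* Hypothetical "printer": records which job printed each line, in order. */
static int printer_log[MAX_JOBS * LINES_PER_JOB];
static int printer_pos = 0;
static pthread_mutex_t printer_lock = PTHREAD_MUTEX_INITIALIZER;

/* One print job: every line is emitted while holding the lock, so no other
   job's lines can be interleaved with this job's lines. */
static void *print_job(void *arg) {
    int job_id = *(int *)arg;
    pthread_mutex_lock(&printer_lock);          /* request the printer */
    for (int i = 0; i < LINES_PER_JOB; i++) {
        printer_log[printer_pos++] = job_id;
        printf("job %d: line %d\n", job_id, i);
    }
    pthread_mutex_unlock(&printer_lock);        /* release it for the next job */
    return NULL;
}

/* Runs n concurrent jobs (n <= MAX_JOBS); returns 1 if every job's lines
   came out as one contiguous block. */
static int run_printer_demo(int n) {
    pthread_t threads[MAX_JOBS];
    int ids[MAX_JOBS];
    printer_pos = 0;
    for (int j = 0; j < n; j++) {
        ids[j] = j;
        int rc = pthread_create(&threads[j], NULL, print_job, &ids[j]);
        assert(rc == 0);
        (void)rc;
    }
    for (int j = 0; j < n; j++)
        pthread_join(threads[j], NULL);
    /* Each block of LINES_PER_JOB entries must belong to a single job. */
    for (int k = 0; k < printer_pos; k += LINES_PER_JOB)
        for (int i = 1; i < LINES_PER_JOB; i++)
            if (printer_log[k + i] != printer_log[k])
                return 0;
    return printer_pos == n * LINES_PER_JOB;
}
```

Removing the lock/unlock pair would allow lines from different jobs to interleave, which is the "garbled output" described above.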

Process synchronization in operating systems ensures that multiple concurrent processes or threads coordinate their activities to access shared resources in a controlled and orderly manner.

This coordination is crucial to prevent data corruption, maintain consistency, and avoid race conditions in multi-tasking environments. Here’s a detailed explanation of how process synchronization works:

Critical sections are segments of code where shared resources (such as variables, data structures, or files) are accessed. To maintain data integrity, only one process should execute within a critical section at any time. Concurrent access to critical sections without synchronization can lead to unpredictable behavior and data corruption.

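Operating systems provide several synchronization primitives or mechanisms that processes can use to coordinate access to shared resources. These include mutex locks, semaphores, monitors, condition variables, readers-writers locks, and spinlocks, each of which is described in more detail below.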

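Deadlocks occur when processes are blocked indefinitely, each waiting for another to release a resource. Operating systems handle them through prevention (for example, requiring resources to be acquired in a fixed global order), avoidance (granting a request only if the system stays in a safe state, as in the Banker's algorithm), and detection and recovery (for example, searching a resource allocation graph for cycles and then terminating or rolling back a process).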
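The prevention technique of acquiring resources in a fixed global order can be sketched as follows. The `account` type, its `id` field, and the `transfer` function are hypothetical stand-ins for any two resources; the point is that `lock_pair` always locks the lower-ID mutex first, so two threads working in opposite directions can never each hold one lock while waiting for the other.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <pthread.h>

/* Hypothetical resource: the id fixes its position in the global lock order. */
typedef struct {
    int id;
    pthread_mutex_t lock;
    long balance;
} account;

/* Always lock the lower-id resource first. Because every thread uses the same
   order, a cycle of "holds one, waits for the other" cannot form. */
static void lock_pair(account *a, account *b) {
    account *first = a->id < b->id ? a : b;
    account *second = a->id < b->id ? b : a;
    pthread_mutex_lock(&first->lock);
    pthread_mutex_lock(&second->lock);
}

static void unlock_pair(account *a, account *b) {
    pthread_mutex_unlock(&a->lock);
    pthread_mutex_unlock(&b->lock);
}

static void transfer(account *from, account *to, long amount) {
    lock_pair(from, to);
    from->balance -= amount;
    to->balance += amount;
    unlock_pair(from, to);
}

static account acct_x = { .id = 1, .lock = PTHREAD_MUTEX_INITIALIZER, .balance = 1000 };
static account acct_y = { .id = 2, .lock = PTHREAD_MUTEX_INITIALIZER, .balance = 1000 };

static void *x_to_y(void *arg) {
    (void)arg;
    for (int i = 0; i < 100000; i++)
        transfer(&acct_x, &acct_y, 1);
    return NULL;
}

static void *y_to_x(void *arg) {
    (void)arg;
    for (int i = 0; i < 100000; i++)
        transfer(&acct_y, &acct_x, 1);
    return NULL;
}

/* If each transfer locked `from` then `to`, these opposite-direction
   threads could deadlock; with lock_pair they always finish. */
static long run_ordering_demo(void) {
    pthread_t t1, t2;
    pthread_create(&t1, NULL, x_to_y, NULL);
    pthread_create(&t2, NULL, y_to_x, NULL);
    pthread_join(t1, NULL);
    pthread_join(t2, NULL);
    return acct_x.balance + acct_y.balance;
}
```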

The OS scheduler determines the order and timing of process execution, which can affect process synchronization. Schedulers may prioritize processes based on their synchronization requirements or deadlines to optimize resource utilization and responsiveness.

Consider a multi-threaded application where multiple threads access a shared buffer:

1. Mutex Lock Usage: Threads acquire a mutex lock before accessing the buffer to prevent simultaneous modifications that could corrupt data.

2. Condition Variables: Threads waiting to read from the buffer wait on a condition variable until data is available. Threads writing to the buffer signal the condition variable when data is written, allowing waiting threads to proceed.

3. Semaphore Usage: A semaphore controls access to the buffer, ensuring that only a limited number of threads can read or write at any given time.

In summary, process synchronization mechanisms provided by operating systems ensure orderly access to shared resources, prevent data corruption, and maintain system reliability in multi-tasking environments.

Choosing the appropriate synchronization mechanism depends on the specific requirements of the application, including performance considerations, complexity of synchronization patterns, and the nature of shared resources being accessed.

Process synchronisation in operating systems refers to the coordination of multiple concurrent processes to ensure they behave correctly when accessing shared resources like memory, files, or hardware devices. It aims to prevent problems such as race conditions, where the outcome depends on execution timing, and deadlocks, where processes are indefinitely blocked due to resource contention.

Various synchronisation mechanisms like locks, semaphores, and monitors enforce orderly access to resources, ensuring data consistency and preventing conflicts. Effective process synchronisation is crucial for stable and efficient multitasking environments, ensuring that processes cooperate, communicate, and coordinate their activities effectively.

A mutex (short for mutual exclusion) is a synchronization primitive used to control access to a shared resource by multiple threads or processes in concurrent programming. It ensures that only one thread can access the resource at a time, preventing simultaneous modifications that could lead to data inconsistency or race conditions.

Threads attempting to access the resource acquire the mutex, execute their critical section (the part of code accessing the shared resource), and then release the mutex, allowing other threads to proceed. This prevents conflicts and maintains data integrity in multi-threaded or multi-process environments.

Example: Consider a scenario where multiple processes must access a shared database. A mutex can control access to the database, allowing only one process to execute database operations at a time. Process A acquires the mutex before accessing the database, while other processes wait until the mutex is released.

Semaphores are synchronization objects with an integer value that controls access to shared resources. A semaphore maintains a counter that tracks how many units of a resource are available, so it can limit the number of concurrent accesses. Processes or threads acquire the semaphore (wait, which decrements the counter and blocks the caller while the counter is zero) and release it (signal, which increments the counter and wakes a blocked waiter), so the number of simultaneous users never exceeds the semaphore's initial value.

This mechanism allows for coordination in scenarios like producer-consumer problems or limiting concurrent access to a critical section of code. Semaphores can also be used to implement other synchronization patterns by adjusting their initial values and operations, providing a flexible tool for managing resource access in multi-threaded environments.
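A counting semaphore that limits concurrent access can be sketched with POSIX semaphores, assuming a Linux system where unnamed `sem_init` semaphores are supported. The names `slots`, `use_resource`, and the `inside`/`peak` statistics are hypothetical; the statistics exist only to show that no more than `MAX_CONCURRENT` threads ever hold a slot at once.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <pthread.h>
#include <semaphore.h>
#include <time.h>

#define MAX_CONCURRENT 2   /* initial semaphore value: how many may use the resource at once */
#define NUM_THREADS 6

static sem_t slots;                        /* counting semaphore */
static pthread_mutex_t stats_lock = PTHREAD_MUTEX_INITIALIZER;
static int inside = 0;                     /* threads currently holding a slot */
static int peak = 0;                       /* largest value `inside` ever reached */

static void *use_resource(void *arg) {
    (void)arg;
    sem_wait(&slots);                      /* acquire: decrement, or block while 0 */

    pthread_mutex_lock(&stats_lock);
    if (++inside > peak)
        peak = inside;
    pthread_mutex_unlock(&stats_lock);

    struct timespec work = {0, 1000000};   /* simulate 1 ms of work on the resource */
    nanosleep(&work, NULL);

    pthread_mutex_lock(&stats_lock);
    inside--;
    pthread_mutex_unlock(&stats_lock);

    sem_post(&slots);                      /* release: increment, waking one waiter */
    return NULL;
}

/* Runs NUM_THREADS threads against the semaphore; returns the observed peak. */
static int run_semaphore_demo(void) {
    pthread_t t[NUM_THREADS];
    int rc = sem_init(&slots, 0, MAX_CONCURRENT);
    assert(rc == 0);
    (void)rc;
    inside = peak = 0;
    for (int i = 0; i < NUM_THREADS; i++)
        pthread_create(&t[i], NULL, use_resource, NULL);
    for (int i = 0; i < NUM_THREADS; i++)
        pthread_join(t[i], NULL);
    sem_destroy(&slots);
    return peak;
}
```

Setting `MAX_CONCURRENT` to 1 turns the same code into a binary semaphore, which behaves like a mutex.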

Example: In a producer-consumer scenario, semaphores can regulate access to a shared buffer. One semaphore counts empty slots, so a producer waits (decrements it) when the buffer is full; another counts filled slots, so a consumer waits when the buffer is empty. A third, binary semaphore ensures that producers and consumers don't modify the buffer simultaneously, preventing data corruption.
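The three-semaphore arrangement can be sketched with POSIX semaphores and one producer and one consumer thread. The buffer size, item count, and function names are illustrative assumptions; the consumer sums what it receives so the result can be checked against 1 + 2 + ... + `ITEMS`.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <pthread.h>
#include <semaphore.h>

#define BUF_SIZE 4
#define ITEMS 100

static int buffer[BUF_SIZE];
static int in = 0, out = 0;    /* next slot to fill / next slot to empty */
static sem_t empty_slots;      /* counts free slots, starts at BUF_SIZE */
static sem_t full_slots;       /* counts filled slots, starts at 0 */
static sem_t buf_mutex;        /* binary semaphore guarding buffer, in, out */

static void *producer(void *arg) {
    (void)arg;
    for (int i = 1; i <= ITEMS; i++) {
        sem_wait(&empty_slots);    /* wait while the buffer is full */
        sem_wait(&buf_mutex);
        buffer[in] = i;
        in = (in + 1) % BUF_SIZE;
        sem_post(&buf_mutex);
        sem_post(&full_slots);     /* one more item to consume */
    }
    return NULL;
}

static void *consumer(void *arg) {
    long *sum = arg;
    for (int i = 0; i < ITEMS; i++) {
        sem_wait(&full_slots);     /* wait while the buffer is empty */
        sem_wait(&buf_mutex);
        *sum += buffer[out];
        out = (out + 1) % BUF_SIZE;
        sem_post(&buf_mutex);
        sem_post(&empty_slots);    /* one more free slot */
    }
    return NULL;
}

/* Returns the sum of all consumed items. */
static long run_producer_consumer(void) {
    pthread_t p, c;
    long sum = 0;
    in = out = 0;
    sem_init(&empty_slots, 0, BUF_SIZE);
    sem_init(&full_slots, 0, 0);
    sem_init(&buf_mutex, 0, 1);
    pthread_create(&p, NULL, producer, NULL);
    pthread_create(&c, NULL, consumer, &sum);
    pthread_join(p, NULL);
    pthread_join(c, NULL);
    sem_destroy(&empty_slots);
    sem_destroy(&full_slots);
    sem_destroy(&buf_mutex);
    return sum;
}
```

Note the order in each loop: a thread waits on the counting semaphore before taking `buf_mutex`. Reversing the two waits could leave a thread blocked while holding the mutex that the other side needs.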

Monitors are high-level synchronization constructs that encapsulate shared data and the procedures (methods or functions) that operate on that data within a single logical unit. Monitors allow safe concurrent access to shared resources by ensuring that only one thread can execute within the monitor at any given time. Other threads must wait until the executing thread exits the monitor.

This approach simplifies synchronization compared to low-level primitives like locks or semaphores: as long as the shared data is accessed only through the monitor's procedures, the monitor's structure prevents data races on it and ensures orderly access.

Example: Consider a shared queue accessed by multiple producer and consumer processes. A monitor can encapsulate the queue data structure along with procedures to add and remove items. Processes must acquire access to the monitor before accessing the queue, ensuring mutual exclusion.
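C has no built-in monitor construct, so the shared-queue example is sketched here by emulating one: the queue data, a mutex, and two condition variables live in one struct, and callers touch the queue only through `qm_put` and `qm_take`. The names `queue_monitor`, `qm_init`, `qm_put`, and `qm_take` are hypothetical. The same struct also shows the mutex and condition-variable pattern from the shared-buffer example earlier.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <pthread.h>

#define QCAP 8

/* Emulated monitor: data and lock together; access only via qm_* functions. */
typedef struct {
    int items[QCAP];
    int head, count;
    pthread_mutex_t lock;       /* one thread "inside the monitor" at a time */
    pthread_cond_t not_empty;   /* consumers wait here */
    pthread_cond_t not_full;    /* producers wait here */
} queue_monitor;

static void qm_init(queue_monitor *q) {
    q->head = q->count = 0;
    pthread_mutex_init(&q->lock, NULL);
    pthread_cond_init(&q->not_empty, NULL);
    pthread_cond_init(&q->not_full, NULL);
}

static void qm_put(queue_monitor *q, int value) {
    pthread_mutex_lock(&q->lock);                   /* enter the monitor */
    while (q->count == QCAP)                        /* re-check after every wakeup */
        pthread_cond_wait(&q->not_full, &q->lock);  /* releases lock while waiting */
    q->items[(q->head + q->count) % QCAP] = value;
    q->count++;
    pthread_cond_signal(&q->not_empty);
    pthread_mutex_unlock(&q->lock);                 /* leave the monitor */
}

static int qm_take(queue_monitor *q) {
    pthread_mutex_lock(&q->lock);
    while (q->count == 0)
        pthread_cond_wait(&q->not_empty, &q->lock);
    int value = q->items[q->head];
    q->head = (q->head + 1) % QCAP;
    q->count--;
    pthread_cond_signal(&q->not_full);
    pthread_mutex_unlock(&q->lock);
    return value;
}

#define N_ITEMS 1000
static queue_monitor shared_q;

static void *producer_thread(void *arg) {
    (void)arg;
    for (int i = 1; i <= N_ITEMS; i++)
        qm_put(&shared_q, i);
    return NULL;
}

/* Two producers, one consumer (the calling thread); returns the sum consumed. */
static long run_monitor_demo(void) {
    pthread_t p1, p2;
    long sum = 0;
    qm_init(&shared_q);
    pthread_create(&p1, NULL, producer_thread, NULL);
    pthread_create(&p2, NULL, producer_thread, NULL);
    for (int i = 0; i < 2 * N_ITEMS; i++)
        sum += qm_take(&shared_q);
    pthread_join(p1, NULL);
    pthread_join(p2, NULL);
    return sum;
}
```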

Condition variables are synchronization primitives used in conjunction with locks or monitors to enable threads to wait until a particular condition on shared data becomes true before proceeding.

They provide a way for threads to suspend execution (wait) until signaled (notify) by another thread that the condition they are waiting for has been met. Condition variables help avoid busy-waiting, where a thread continuously polls for a condition to be true, which is inefficient and wastes CPU resources.

Example: In a multithreaded application, a pool of worker threads may wait for tasks to become available in a shared task queue. Condition variables can signal waiting threads when a new task is added to the queue, allowing them to wake up and process it.
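The worker-pool example can be sketched with a POSIX condition variable. As a simplifying assumption, a hypothetical `pending_tasks` counter stands in for the task queue, and the names `worker`, `submit_task`, and `shutting_down` are illustrative. Workers sleep in `pthread_cond_wait` instead of busy-waiting, and the `while` loop re-checks the condition because a wakeup does not by itself guarantee the condition still holds.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <pthread.h>
#include <stdbool.h>

#define N_WORKERS 3

static pthread_mutex_t pool_lock = PTHREAD_MUTEX_INITIALIZER;
static pthread_cond_t task_ready = PTHREAD_COND_INITIALIZER;
static int pending_tasks = 0;     /* stands in for a real task queue */
static int completed_tasks = 0;
static bool shutting_down = false;

static void *worker(void *arg) {
    (void)arg;
    pthread_mutex_lock(&pool_lock);
    for (;;) {
        /* Sleep until there is work or the pool is shutting down. */
        while (pending_tasks == 0 && !shutting_down)
            pthread_cond_wait(&task_ready, &pool_lock);
        if (pending_tasks == 0)          /* only reachable when shutting down */
            break;
        pending_tasks--;                 /* take one task */
        pthread_mutex_unlock(&pool_lock);
        /* ... process the task here, outside the lock ... */
        pthread_mutex_lock(&pool_lock);
        completed_tasks++;
    }
    pthread_mutex_unlock(&pool_lock);
    return NULL;
}

static void submit_task(void) {
    pthread_mutex_lock(&pool_lock);
    pending_tasks++;
    pthread_cond_signal(&task_ready);    /* wake one waiting worker */
    pthread_mutex_unlock(&pool_lock);
}

/* Submits n tasks, then shuts the pool down; returns how many were completed. */
static int run_pool_demo(int n) {
    pthread_t workers[N_WORKERS];
    pending_tasks = completed_tasks = 0;
    shutting_down = false;
    for (int i = 0; i < N_WORKERS; i++)
        pthread_create(&workers[i], NULL, worker, NULL);
    for (int i = 0; i < n; i++)
        submit_task();
    pthread_mutex_lock(&pool_lock);
    shutting_down = true;
    pthread_cond_broadcast(&task_ready); /* wake every worker so all can exit */
    pthread_mutex_unlock(&pool_lock);
    for (int i = 0; i < N_WORKERS; i++)
        pthread_join(workers[i], NULL);
    return completed_tasks;
}
```

Workers drain any remaining tasks before exiting, because they leave the loop only when no tasks are pending and shutdown has been requested.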

Readers-writers locks (RW locks) are synchronization primitives that allow multiple readers simultaneous access to a shared resource but restrict write access to only one writer at a time.

They manage access to a shared resource that can be read by multiple threads concurrently but requires exclusive access for writing to maintain data integrity.

Example: In a document editing application, multiple users may read a document simultaneously (reader access), but only one user can edit the document at a time (writer access). A readers-writers lock ensures that reads can occur concurrently while maintaining exclusive access during writes.
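The document-editing example can be sketched with POSIX `pthread_rwlock_t`. The `document` buffer, `version` counter, and the `read_document`/`edit_document` functions are hypothetical. Readers take the shared lock, the writer takes the exclusive lock, and each reader checks that the text and version it sees always agree.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <pthread.h>
#include <string.h>

#define N_READERS 4
#define N_EDITS 100

/* Hypothetical shared document: its text and version must always agree. */
static char document[32];
static int version;
static pthread_rwlock_t doc_lock = PTHREAD_RWLOCK_INITIALIZER;

/* Shared (read) lock: several readers may hold it at the same time. */
static int read_document(char *out, size_t n) {
    pthread_rwlock_rdlock(&doc_lock);
    strncpy(out, document, n - 1);
    out[n - 1] = '\0';
    int v = version;
    pthread_rwlock_unlock(&doc_lock);
    return v;
}

/* Exclusive (write) lock: no reader or other writer runs meanwhile. */
static void edit_document(const char *text) {
    pthread_rwlock_wrlock(&doc_lock);
    strncpy(document, text, sizeof document - 1);
    document[sizeof document - 1] = '\0';
    version++;
    pthread_rwlock_unlock(&doc_lock);
}

static void *reader(void *arg) {
    int *consistent = arg;
    char copy[32];
    *consistent = 1;
    for (int i = 0; i < 1000; i++) {
        int v = read_document(copy, sizeof copy);
        /* Text and version were read under one lock, so they must match. */
        const char *expected = (v == 0) ? "draft" : "edited";
        if (strcmp(copy, expected) != 0)
            *consistent = 0;
    }
    return NULL;
}

static void *writer(void *arg) {
    (void)arg;
    for (int i = 0; i < N_EDITS; i++)
        edit_document("edited");
    return NULL;
}

/* Returns 1 if every read was consistent and all edits were applied. */
static int run_rwlock_demo(void) {
    pthread_t readers[N_READERS], w;
    int ok[N_READERS];
    strcpy(document, "draft");
    version = 0;
    for (int i = 0; i < N_READERS; i++)
        pthread_create(&readers[i], NULL, reader, &ok[i]);
    pthread_create(&w, NULL, writer, NULL);
    for (int i = 0; i < N_READERS; i++)
        pthread_join(readers[i], NULL);
    pthread_join(w, NULL);
    int all_ok = 1;
    for (int i = 0; i < N_READERS; i++)
        all_ok = all_ok && ok[i];
    char final_text[32];
    return all_ok && read_document(final_text, sizeof final_text) == N_EDITS;
}
```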

Spinlocks are locks in which a waiting thread continuously checks ("spins") in a tight loop until the lock becomes available. They are suitable for environments where waiting is expected to be brief.

It differs from traditional locks like mutexes or semaphores in that it does not put the waiting thread to sleep when the lock is not available; instead, it actively waits by executing a tight loop until the lock becomes free.

Example: A spinlock may protect a critical code section in real-time systems with low-latency requirements. If the lock is held, the spinning process continuously checks if the lock becomes available, avoiding the overhead of context switches associated with traditional blocking locks.
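A minimal test-and-set spinlock can be built on C11 `atomic_flag`. This is a teaching sketch, not a production lock: the `spinlock` type and `spin_lock`/`spin_unlock` functions are hypothetical, and real implementations usually add a CPU pause hint or back-off inside the loop. The acquire/release memory orders make writes inside the critical section visible to the next thread that takes the lock.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <pthread.h>
#include <stdatomic.h>

typedef struct { atomic_flag held; } spinlock;

static void spin_lock(spinlock *s) {
    /* test_and_set returns the previous value: keep spinning while it was set. */
    while (atomic_flag_test_and_set_explicit(&s->held, memory_order_acquire))
        ;   /* busy-wait: the thread is never put to sleep */
}

static void spin_unlock(spinlock *s) {
    atomic_flag_clear_explicit(&s->held, memory_order_release);
}

static spinlock counter_lock = { ATOMIC_FLAG_INIT };
static long counter = 0;

static void *add_many(void *arg) {
    long n = *(long *)arg;
    for (long i = 0; i < n; i++) {
        spin_lock(&counter_lock);
        counter++;               /* very short critical section: a good fit for spinning */
        spin_unlock(&counter_lock);
    }
    return NULL;
}

/* Two threads each add per_thread to the shared counter; returns the total. */
static long run_spinlock_demo(long per_thread) {
    pthread_t a, b;
    counter = 0;
    pthread_create(&a, NULL, add_many, &per_thread);
    pthread_create(&b, NULL, add_many, &per_thread);
    pthread_join(a, NULL);
    pthread_join(b, NULL);
    return counter;
}
```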

These process synchronization mechanisms provide different strategies for managing concurrent access to shared resources in operating systems, each suitable for different synchronization requirements and scenarios.

Each feature of process synchronization in operating systems plays a crucial role in ensuring the smooth and efficient operation of concurrent processes. Mutual exclusion prevents conflicts by allowing only one process to access a resource at a time, while deadlock avoidance mechanisms prevent processes from getting stuck due to circular dependencies.

Semaphore management enables coordinated access to shared resources, and atomic operations ensure data integrity in concurrent environments. Inter-process communication facilitates collaboration, while scheduling policies optimize resource utilization.

Mutual exclusion ensures that only one process accesses a shared resource at any given time, preventing conflicts and preserving data integrity. It is a fundamental concept in process synchronization, crucial for maintaining consistency in multitasking environments.

Deadlock avoidance mechanisms prevent processes from entering deadlock states, where they are indefinitely blocked because of circular dependencies on resources. Together with techniques that detect and resolve deadlocks that do occur, they keep the system operating and prevent processes from waiting forever for resources.

Semaphores are synchronization primitives used to control access to shared resources. Effective semaphore management enables multiple processes to coordinate their actions, preventing race conditions and ensuring orderly resource access.

Atomic operations are executed indivisibly: no other process or thread can observe them half-finished or interleave with them. This prevents the data corruption that could arise from interruptions in concurrent environments and guarantees consistency when accessing shared resources.
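An atomic read-modify-write can be sketched with C11 `<stdatomic.h>`. Here `atomic_fetch_add` performs the load, add, and store of an increment as one indivisible step, so concurrent threads cannot lose each other's updates and no lock is needed. The `hits` counter and `run_atomic_demo` function are hypothetical names.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <pthread.h>
#include <stdatomic.h>

#define PER_THREAD 100000

static atomic_long hits = 0;   /* updated only through atomic operations */

static void *count_hits(void *arg) {
    (void)arg;
    for (int i = 0; i < PER_THREAD; i++)
        atomic_fetch_add(&hits, 1);   /* indivisible increment: no update is lost */
    return NULL;
}

/* Runs nthreads (1..8) incrementing threads; returns the final count. */
static long run_atomic_demo(int nthreads) {
    pthread_t t[8];
    assert(nthreads > 0 && nthreads <= 8);
    atomic_store(&hits, 0);
    for (int i = 0; i < nthreads; i++)
        pthread_create(&t[i], NULL, count_hits, NULL);
    for (int i = 0; i < nthreads; i++)
        pthread_join(t[i], NULL);
    return atomic_load(&hits);
}
```

With a plain `long` and `hits++`, the total could come out lower than expected, because two threads can read the same old value before either writes back.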

Inter-process communication (IPC) mechanisms, such as pipes, message queues, and shared memory, facilitate the exchange of data and signals between processes, enabling collaboration and coordination both on a single machine and in distributed systems. These mechanisms play a crucial role in synchronizing the activities of concurrent processes.

Scheduling policies prioritize processes based on their synchronization needs or deadlines, optimizing resource utilization and system responsiveness. These policies aim to allocate CPU time efficiently and reduce contention among processes.

Resource allocation mechanisms manage the distribution of resources among processes to prevent contention and ensure fair access. By managing resources effectively, these mechanisms maintain system stability and support equitable sharing among processes.

Error handling strategies in process synchronization mechanisms detect and manage exceptional conditions, ensuring robustness and reliability. They maintain system stability and prevent disruptions in synchronization operations.

Performance optimization techniques minimize synchronization overhead and latency, for example by keeping critical sections short, enhancing system efficiency and responsiveness when accessing shared resources. These improvements raise overall system performance and the user experience.

Process synchronization is a crucial concept in operating systems. It ensures that multiple processes or threads can coordinate their activities effectively and avoid conflicts when accessing shared resources such as data, files, or hardware devices.

It involves implementing mechanisms that allow processes to communicate, coordinate, and synchronize their execution to maintain data consistency and prevent race conditions.

Process management involves creating, scheduling, and terminating processes in an operating system, ensuring efficient resource utilization and system stability. Synchronization, on the other hand, focuses on coordinating the activities of concurrent processes, particularly when accessing shared resources, to prevent conflicts and maintain data integrity.

Together, process management and synchronization form the backbone of multitasking environments. Process management oversees the lifecycle of processes, including creation, scheduling, and termination, while synchronization mechanisms regulate access to shared resources to prevent race conditions, deadlocks, and other synchronization issues.

By effectively managing processes and synchronizing their activities, operating systems can ensure smooth and orderly execution of tasks, optimize resource utilization, and maintain system stability in multitasking environments.

A race condition is a situation that occurs in concurrent programming when the outcome of a program depends on the relative timing of events or operations. It arises when multiple processes or threads access shared resources or execute critical sections of code concurrently, and the outcome depends on the interleaving or ordering of their execution.

In a race condition, the correct behavior of the program depends on the precise sequence of events, which can be unpredictable and non-deterministic. This can lead to unexpected and erroneous results, including data corruption, inconsistent state, or program crashes.

Race conditions are often unintended and challenging to detect and debug because they depend on subtle timing issues that may vary each time the program runs. They are a common source of bugs in concurrent programs and require careful synchronization mechanisms, such as locks or semaphores, to prevent them.

Let's consider a simple example involving two threads that are incrementing a shared counter variable.

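1. Initial Setup: A shared variable counter starts at 0. Two threads, A and B, each execute counter++ once, so the expected final value is 2.

2. Race Condition Scenario: counter++ is not a single indivisible step; it reads the current value, adds 1, and writes the result back. Thread A reads 0, and before it writes its result, the scheduler switches to Thread B, which also reads 0.

3. Unexpected Outcome: Both threads compute 1 and write 1 back, so the final value is 1 instead of 2, and one increment is lost.

4. Illustration: The result depends on how the scheduler interleaves the two threads. If A finishes its read-add-write before B starts, the counter ends at 2; if their steps overlap as described above, it ends at 1.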
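Because a real race depends on timing and may not show up on a given run, the interleaving above is replayed deterministically here on a single thread. Each thread's counter++ is split into its logical load, add, and store steps, with hypothetical local variables reg_a and reg_b standing in for each thread's private register.

```c
#include <assert.h>
#include <stdio.h>

/* Replays the bad interleaving of two "counter++" operations step by step. */
static int replay_interleaving(void) {
    int counter = 0;        /* the shared variable */
    int reg_a, reg_b;       /* each thread's private register */

    reg_a = counter;        /* Thread A loads 0 */
    reg_b = counter;        /* Thread B loads 0 before A has stored */
    reg_a = reg_a + 1;      /* A computes 1 */
    reg_b = reg_b + 1;      /* B computes 1 */
    counter = reg_a;        /* A stores 1 */
    counter = reg_b;        /* B stores 1, overwriting A's update */

    printf("final counter = %d (expected 2)\n", counter);
    return counter;
}
```

replay_interleaving returns 1: the second store overwrites the first, which is exactly the lost update a lock or an atomic increment prevents.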

Race conditions typically occur due to the following reasons.

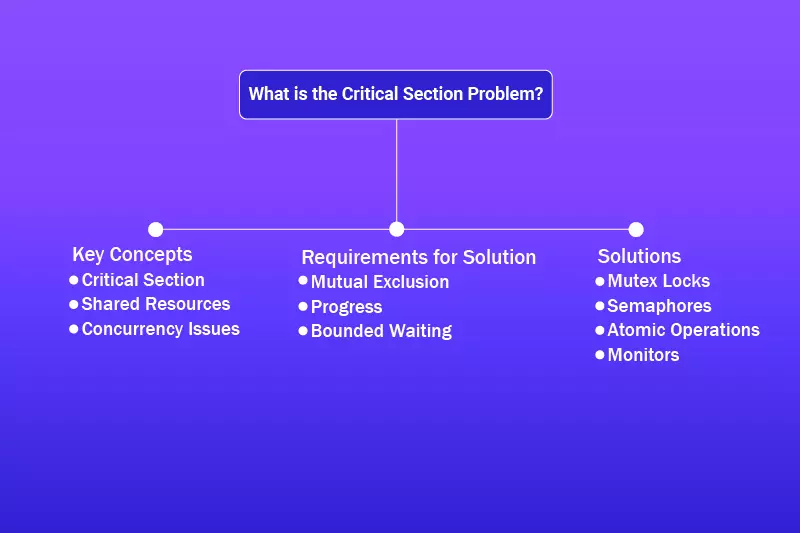

The Critical Section Problem is a fundamental challenge in concurrent programming where multiple processes or threads need to access shared resources or critical sections of code, but their simultaneous access can lead to data inconsistency or system crashes.

The goal is to ensure that only one process can execute within a critical section at any given time, preventing conflicts and maintaining data integrity. Here's a detailed explanation of the Critical Section Problem:

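1. Critical Section: a segment of code in which a process accesses shared resources.

2. Shared Resources: the variables, data structures, files, or devices that more than one process can access.

3. Concurrency Issues: if two processes execute their critical sections at the same time, their operations can interleave, producing race conditions and inconsistent data.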

To solve the Critical Section Problem, the solution must satisfy the following three requirements:

1. Mutual Exclusion: Only one process may be in its critical section at any time.

2. Progress: If no process is in its critical section and some processes wish to enter their critical sections, only those processes not executing in their remainder sections can participate in deciding which will enter its critical section next, and this selection cannot be postponed indefinitely.

3. Bounded Waiting: A bound must exist on the number of times that other processes are allowed to enter their critical sections after a process has made a request to enter its critical section and before that request is granted.

Several synchronization mechanisms can be used to address the Critical Section Problem, including locks, semaphores, monitors, and hardware-supported atomic operations.

One classical solution to the critical section problem involves using semaphores, which are synchronization primitives that provide mechanisms for process synchronization and mutual exclusion.

Let's outline the solution using semaphores:

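Initialization:

```
semaphore mutex = 1; // Binary semaphore: 1 means the critical section is free
```

Process Code: Each process follows this template:

```
do {
    // Entry Section
    wait(mutex);    // Decrement; block while another process is inside

    // Critical Section
    // Access shared resources here

    // Exit Section
    signal(mutex);  // Increment; wake one blocked process, if any

    // Remainder Section
    // Non-critical code
} while (true);
```

Explanation:

wait(mutex) decrements the semaphore and blocks the caller if no unit is available, and signal(mutex) increments it and wakes one blocked process. Because mutex starts at 1, at most one process can be between its wait and its signal at any time, which gives mutual exclusion. Progress holds because a process is blocked only while another process is actually inside its critical section. Bounded waiting holds only if the semaphore wakes blocked processes in a fair order such as FIFO; a semaphore that wakes an arbitrary waiter can, in principle, pass one process over indefinitely.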
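The template can be translated to POSIX semaphores, where sem_wait plays the role of wait and sem_post the role of signal. In this sketch two threads stand in for two processes, and the names process, shared_counter, and run_semaphore_cs_demo are hypothetical; incrementing the counter is the critical section.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <pthread.h>
#include <semaphore.h>

#define ITERATIONS 100000

static sem_t mutex;              /* semaphore mutex = 1 */
static long shared_counter = 0;  /* the shared resource */

static void *process(void *arg) {
    (void)arg;
    for (int i = 0; i < ITERATIONS; i++) {
        sem_wait(&mutex);        /* wait(mutex): entry section */
        shared_counter++;        /* critical section */
        sem_post(&mutex);        /* signal(mutex): exit section */
        /* remainder section */
    }
    return NULL;
}

/* Two threads run the template; returns the final counter value. */
static long run_semaphore_cs_demo(void) {
    pthread_t p0, p1;
    shared_counter = 0;
    int rc = sem_init(&mutex, 0, 1);   /* pshared = 0: shared by threads of one process */
    assert(rc == 0);
    (void)rc;
    pthread_create(&p0, NULL, process, NULL);
    pthread_create(&p1, NULL, process, NULL);
    pthread_join(p0, NULL);
    pthread_join(p1, NULL);
    sem_destroy(&mutex);
    return shared_counter;
}
```

POSIX leaves the choice of which blocked thread sem_post wakes to the scheduling policy, so this sketch does not by itself guarantee bounded waiting.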

Semaphore-based solutions such as this one address the Critical Section Problem by providing mechanisms for mutual exclusion, progress, and bounded waiting, ensuring that processes can safely access and manipulate shared resources in concurrent environments. The choice of solution depends on the programming model, system requirements, and performance considerations.

Synchronization lies at the heart of efficient and reliable operation in modern operating systems. It ensures that concurrent processes or threads can collaborate harmoniously, accessing shared resources without conflicts that could lead to data corruption or system instability.

By enforcing resource access and coordination rules, synchronization mechanisms prevent race conditions, maintain data integrity, and facilitate effective communication between processes.

Synchronization in operating systems is driven by several fundamental requirements to ensure effective and orderly process management. First and foremost, it is crucial to enforce mutual exclusion, which guarantees that only one process at a time can access a shared resource, preventing concurrent modifications that could lead to inconsistencies or errors.

The "Peterson's Solution" is a classic algorithm for solving the Critical Section Problem, named after its creator, Gary L. Peterson. It provides a simple mechanism for achieving mutual exclusion between two processes using only shared variables. Unlike a plain turn variable, it does not force the processes to strictly alternate: a process can re-enter its critical section repeatedly while the other process is not trying to enter.

The basic idea of Peterson's Solution involves two shared variables: flag[] and turn. The flag[] array indicates whether a process wants to enter its critical section, while the turn variable determines whose turn it is to enter the critical section. The solution works as follows:

1. Each process sets its flag[] to indicate its desire to enter the critical section.

2. The process sets turn to the index of the other process, indicating that it is the other process's turn to enter the critical section.

3. The process enters a loop, checking whether the other process's flag[] is set and whether it is the other process's turn. As long as both conditions hold, the process busy-waits.

4. When the other process leaves its critical section, it resets its own flag[] to false, indicating that it no longer wants to enter. This ends the waiting loop, and the waiting process enters its critical section; it in turn resets its own flag[] when it leaves.

Because turn can hold only one value, when both processes want to enter at the same time, the one that wrote turn last waits while the other proceeds. This ensures mutual exclusion between the two processes, as only one process can be in its critical section at a time.

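1. Mutual Exclusion: the goal is that at most one of the two processes, P0 and P1, is in its critical section at any time.

2. Assumptions: there are exactly two processes, and loads and stores of the shared variables are atomic and take effect in program order. Modern compilers and CPUs may reorder plain memory accesses, so real implementations need atomic operations or memory barriers.

3. Algorithm Components: a boolean array flag[2], where flag[i] is true when process i wants to enter its critical section, and an integer turn that records which process must yield.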

Solution Outline

```
bool flag[2] = {false, false}; // Initially, neither process wants to enter the critical section
int turn = 0;                  // Arbitrarily choose one process to start (can be 0 or 1)
```

Process P0:

```
do {
    flag[0] = true;               // P0 wants to enter the critical section
    turn = 1;                     // Pass the turn to P1
    while (flag[1] && turn == 1); // Wait while P1 wants to enter and it's P1's turn

    // Critical Section
    // Access shared resources

    flag[0] = false;              // Exit the critical section

    // Remainder Section
    // Non-critical code
} while (true);
```

Process P1:

```
do {
    flag[1] = true;               // P1 wants to enter the critical section
    turn = 0;                     // Pass the turn to P0
    while (flag[0] && turn == 0); // Wait while P0 wants to enter and it's P0's turn

    // Critical Section
    // Access shared resources

    flag[1] = false;              // Exit the critical section

    // Remainder Section
    // Non-critical code
} while (true);
```

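Entry Section: the process sets its own flag to true and gives turn to the other process.

Waiting Loop: the process busy-waits while the other process's flag is true and turn still names the other process.

Critical Section: the process accesses the shared resources.

Exit Section: the process sets its own flag back to false, which releases the other process if it is waiting in its loop.

Remainder Section: the process runs code that does not touch shared resources.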

Peterson's solution is elegant due to its simplicity and effectiveness in achieving mutual exclusion between two processes. However, it is specific to scenarios involving exactly two processes and may not be directly applicable to systems with more than two processes without modification or combination with other synchronization mechanisms.
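Following the assumption that shared loads and stores take effect in program order, the pseudocode can be made runnable with C11 sequentially consistent atomics (the default for atomic_load and atomic_store), which prevent the compiler and CPU reorderings that would break the algorithm with plain variables. Two threads play P0 and P1, and the names peterson, shared, and run_peterson_demo are hypothetical. The sched_yield call in the waiting loop is an addition so that a single-core machine does not spin for a whole time slice.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <pthread.h>
#include <sched.h>
#include <stdatomic.h>
#include <stdbool.h>

#define ROUNDS 10000

static atomic_bool flag[2];   /* flag[i]: process i wants to enter */
static atomic_int turn;       /* which process must yield */
static long shared = 0;       /* protected only by Peterson's algorithm */

static void *peterson(void *arg) {
    int self = *(int *)arg;
    int other = 1 - self;
    for (int i = 0; i < ROUNDS; i++) {
        atomic_store(&flag[self], true);     /* entry: I want to enter */
        atomic_store(&turn, other);          /* let the other go first */
        while (atomic_load(&flag[other]) && atomic_load(&turn) == other)
            sched_yield();                   /* waiting loop */
        shared++;                            /* critical section */
        atomic_store(&flag[self], false);    /* exit section */
    }
    return NULL;
}

/* Runs P0 and P1 concurrently; returns the final value of shared. */
static long run_peterson_demo(void) {
    pthread_t t0, t1;
    int id0 = 0, id1 = 1;
    atomic_store(&flag[0], false);
    atomic_store(&flag[1], false);
    atomic_store(&turn, 0);
    shared = 0;
    pthread_create(&t0, NULL, peterson, &id0);
    pthread_create(&t1, NULL, peterson, &id1);
    pthread_join(t0, NULL);
    pthread_join(t1, NULL);
    return shared;
}
```

Busy-waiting still consumes CPU time, which is one reason application code normally relies on OS-provided mutexes rather than Peterson's algorithm.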

Synchronization hardware refers to specialized components or features built into computer systems to facilitate synchronization and coordination between concurrent processes or threads.

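These hardware mechanisms aim to improve the efficiency, performance, and scalability of synchronization operations in multitasking environments. Some common examples of synchronization hardware include atomic instructions (such as test-and-set and compare-and-swap), memory barriers, cache coherence protocols, and transactional memory.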

These hardware features complement software-based synchronization mechanisms (e.g., locks, semaphores, and barriers) and help improve concurrent programs' performance, scalability, and reliability in modern computing systems.
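Compare-and-swap (CAS) can be illustrated through C11 atomic_compare_exchange_weak, which compilers typically map to the processor's atomic compare-and-exchange instruction. This sketch uses a CAS retry loop to maintain a shared maximum without a lock; the names max_seen, update_max, and run_cas_demo are hypothetical.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <pthread.h>
#include <stdatomic.h>

static atomic_int max_seen = 0;

/* Atomically raise max_seen to value if value is larger. */
static void update_max(int value) {
    int current = atomic_load(&max_seen);
    /* If another thread changed max_seen since we read it, the CAS fails,
       current is refreshed with the latest value, and we retry. */
    while (value > current &&
           !atomic_compare_exchange_weak(&max_seen, &current, value))
        ;
}

static void *report(void *arg) {
    int base = *(int *)arg;
    for (int i = 0; i < 1000; i++)
        update_max(base + i);
    return NULL;
}

/* Four threads report overlapping value ranges; returns the maximum seen. */
static int run_cas_demo(void) {
    pthread_t t[4];
    int bases[4] = {0, 5000, 2500, 7500};
    atomic_store(&max_seen, 0);
    for (int i = 0; i < 4; i++)
        pthread_create(&t[i], NULL, report, &bases[i]);
    for (int i = 0; i < 4; i++)
        pthread_join(t[i], NULL);
    return atomic_load(&max_seen);
}
```

The weak form may fail spuriously, which is harmless here because the loop simply retries.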

Process synchronization in operating systems offers several advantages, crucial for ensuring efficient and reliable operation of concurrent processes. Here are the main advantages.

Synchronization is a fundamental concept in operating systems and concurrent programming, essential for ensuring the correct and efficient execution of concurrent processes or threads. By coordinating the activities of multiple processes, synchronization mechanisms prevent race conditions, data corruption, deadlocks, and other synchronization issues, ensuring system stability and reliability. Various synchronization techniques, such as locks, semaphores, monitors, and atomic operations, solve the Critical Section Problem and facilitate mutual exclusion, progress, and bounded waiting.

Additionally, synchronization hardware features, including atomic instructions, memory barriers, cache coherence protocols, and transactional memory, enhance the efficiency and scalability of synchronization operations in modern computing systems. Synchronisation is crucial in enabling multitasking environments and supporting inter-process communication, resource management, and concurrency control. By enforcing synchronization rules and ensuring orderly access to shared resources, synchronization mechanisms contribute to the smooth and reliable operation of operating systems and parallel computing applications, facilitating the development of robust and responsive software systems.


Process synchronization is a mechanism used in operating systems to coordinate the activities of concurrent processes or threads that share resources, ensuring proper sequencing, mutual exclusion, and data consistency.

Process synchronisation is essential for preventing race conditions, data corruption, deadlocks, and other synchronization issues in concurrent programs, ensuring system stability, reliability, and correctness.

Common synchronization mechanisms include locks, semaphores, monitors, barriers, atomic operations, and transactional memory. These mechanisms solve the Critical Section Problem and facilitate mutual exclusion, progress, and bounded waiting.

Deadlock occurs when two or more processes are indefinitely blocked because each is waiting for a resource held by another, resulting in a cyclic dependency. Techniques such as resource ordering (prevention), checking that a request keeps the system in a safe state before granting it (avoidance), and detection algorithms followed by recovery are used to mitigate this issue.

A race condition is a situation in concurrent programming where the outcome of a program depends on the relative timing of events or operations. It occurs when multiple processes or threads access shared resources without proper synchronization, leading to unpredictable and potentially erroneous behavior.

Mutual exclusion ensures that only one process or thread can access a shared resource at any given time, preventing concurrent access and maintaining data integrity. It is a fundamental concept in process synchronization and is typically enforced using locks or semaphores.