Data science and big data are two closely related terms that are often used interchangeably, but they differ in scope and application. Data science is a multidisciplinary field that combines statistics, computer science, and domain expertise to extract meaningful insights from structured and unstructured data. It involves analyzing, interpreting, and visualizing data to inform business decisions and solve complex problems.

Data scientists use tools like machine learning algorithms and data modeling to create predictive models and actionable insights. Big data, on the other hand, refers to extremely large datasets that cannot be processed or analyzed using traditional data processing methods due to their sheer size, complexity, or velocity. Big data involves handling vast amounts of data generated from various sources such as social media, IoT devices, and online transactions.

The goal of big data is to collect, store, and process this information efficiently, often using distributed systems and technologies like Hadoop, Spark, and NoSQL databases. While data science focuses on deriving insights and predictions from data, big data focuses on managing and processing large volumes of data. In essence, big data is the infrastructure and dataset that data scientists work with. Both fields are critical in today’s data-driven world, but they serve different roles in turning raw data into valuable information.

Big data refers to extremely large datasets that are so complex and voluminous that traditional data processing methods and tools are insufficient to handle them. These datasets can come from various sources, including social media platforms, IoT devices, transaction records, and more.

The main challenge of big data lies in its three primary characteristics: volume (the sheer amount of data), velocity (the speed at which data is generated and processed), and variety (the diverse types of data, including structured and unstructured data). To manage and analyze big data, specialized technologies and frameworks such as Hadoop, Apache Spark, and NoSQL databases are employed.

These tools allow businesses and organizations to store, process, and analyze vast quantities of data efficiently. Big data analytics helps uncover hidden patterns, correlations, and trends that can significantly impact decision-making, offering valuable insights into customer behavior, market trends, and operational efficiencies.

Data science is a multidisciplinary field that combines elements of statistics, computer science, and domain expertise to extract meaningful insights from data. The primary goal of data science is to analyze and interpret complex data to inform decision-making and solve problems.

Data scientists employ a range of techniques, including machine learning, artificial intelligence, and predictive analytics, to create models that can predict future trends, automate processes, or optimize systems. Data science is applied across various sectors, including healthcare, finance, marketing, and technology, where it plays a crucial role in transforming data into actionable insights.

Data scientists work with both small and large datasets, depending on the problem at hand, and they focus on cleaning, structuring, and visualizing data to make it more understandable for decision-makers. Unlike big data, which deals with massive datasets, data science is more concerned with deriving value from data, regardless of its size.

Big data and data science are two closely related fields, but they serve different purposes in the data-driven world. While both deal with data, big data focuses on managing and processing massive datasets, whereas data science is concerned with extracting valuable insights from data.

Big data uses specialized tools and technologies to handle large-scale data, whereas data science uses algorithms and statistical models to analyze and interpret data. The key differences between these fields highlight how each contributes to turning data into valuable knowledge. Understanding these differences is essential for businesses to apply both disciplines in their decision-making processes effectively.

Big data and data science work hand-in-hand to provide organizations with valuable insights that drive decision-making and innovation. Big data provides the vast amounts of data that organizations need to analyze in order to identify trends, patterns, and correlations. However, the sheer volume and complexity of big data make it difficult to manage and analyze without the right tools. This is where data science comes into play.

Data science applies advanced algorithms, machine learning techniques, and statistical models to analyze these large datasets, transforming raw data into actionable insights. In other words, big data provides the foundation, and data science unlocks the hidden potential within that data by making sense of it through sophisticated analytical methods. Together, these fields can address complex challenges across various industries.

For example, in healthcare, big data is used to gather large amounts of patient data from multiple sources, such as medical records, wearables, and sensor devices. Data science then analyzes this data to predict health outcomes, recommend treatments, and improve patient care. In e-commerce, big data collects user behavior data, and data science is used to create personalized shopping experiences and optimize inventory management. As a result, big data and data science form a powerful synergy that enables organizations to harness the full potential of data for strategic decision-making and operational efficiency.

Data scientists are critical for turning large sets of raw data into meaningful insights. They employ statistical techniques, machine learning, and data mining to analyze complex datasets and identify trends, patterns, and correlations.

Their goal is to convert data into actionable information that organizations can use for strategic decision-making. The role of a data scientist is cross-functional, requiring close collaboration with other departments to align their analysis with business goals. Their responsibilities extend beyond simply analyzing data.

Data scientists also design and build predictive models, conduct in-depth analyses, and communicate their findings in a way that is easily understandable for business leaders. They must ensure the models they create are robust, accurate, and useful for the organization. Data scientists bridge the gap between raw data and the strategies that can drive organizational success.

Data science is a driving force behind modern business innovation, as it allows organizations to analyze and derive actionable insights from vast amounts of data. By utilizing machine learning algorithms, artificial intelligence (AI), and statistical techniques, data science empowers businesses to make data-driven decisions. This helps businesses improve efficiency, reduce costs, and enhance customer satisfaction by offering products and services tailored to individual needs.

As the demand for skilled data scientists rises, companies recognize the importance of leveraging data to maintain a competitive edge in today's fast-paced market. The benefits of data science extend across industries, from retail and finance to healthcare and technology.

By analyzing patterns, predicting trends, and uncovering hidden insights in data, businesses can enhance their decision-making capabilities. Moreover, data science supports innovation by enabling companies to explore new opportunities and drive growth. With its ability to transform raw data into valuable insights, data science is an essential tool for businesses aiming to stay ahead of the curve in a data-driven world.

While data science offers numerous benefits, there are several challenges and disadvantages associated with its implementation. These challenges can impact the effectiveness and efficiency of data-driven decision-making. Data science requires access to large datasets, sophisticated algorithms, and skilled professionals to extract meaningful insights.

Additionally, privacy concerns, data quality problems, and ethical issues may arise, and they need to be addressed for data science to be applied effectively across industries. In this rapidly evolving field, businesses also struggle to keep up with continuous advancements in technology. Data science can be complex, requiring a deep understanding of data processing, machine learning models, and statistical analysis.

Furthermore, as organizations increasingly rely on data science, they may run into issues related to data bias, data security, and a shortage of skilled professionals to manage the processes. In this section, we will explore the disadvantages of data science in greater detail.

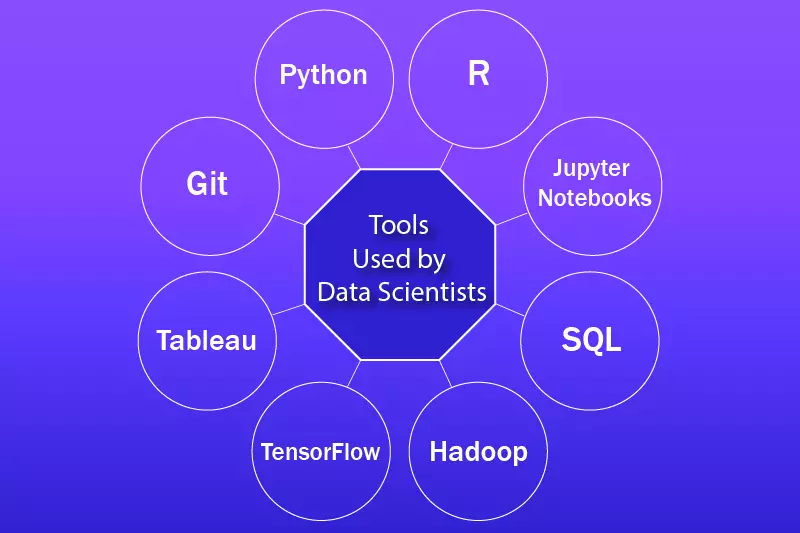

Data scientists rely on a variety of tools to process, analyze, and visualize data. These tools enable them to perform complex analyses, build predictive models, and derive actionable insights from large datasets.

The tools used by data scientists span a wide range of categories, including programming languages, data visualization platforms, and machine learning libraries. Some of these tools are open-source, while others are proprietary, and the choice of tools depends on the specific needs of a project or organization. In today’s data-driven world, the selection of the right tools is crucial for ensuring that data science projects are efficient and successful.

These tools help automate data processing tasks, improve model accuracy, and facilitate better decision-making. Below, we explore the key tools used by data scientists to navigate the complex landscape of data analysis.

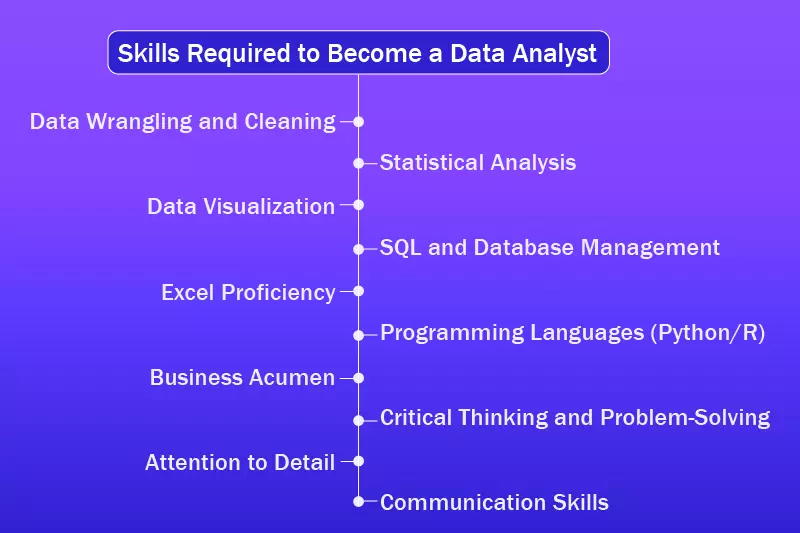

Data analysts play a crucial role in transforming raw data into actionable insights for organizations. They gather, clean, and analyze data to uncover trends, patterns, and relationships, helping businesses make informed decisions. The skills required to become a data analyst span across multiple disciplines, including statistics, programming, and data visualization.

Data analysts must be comfortable with various tools and technologies and have a strong understanding of business needs. In this competitive field, developing expertise in key areas can help you stand out and succeed.

A data analyst’s skill set also includes the ability to work with large datasets, extract relevant insights, and present findings clearly and concisely. They must have excellent problem-solving abilities, critical thinking skills, and a strong attention to detail. Below are the essential skills required to become a proficient data analyst.

Data wrangling involves cleaning and preparing raw data for analysis, ensuring it’s usable and reliable. This is a critical skill for data analysts, as raw data often contains errors or inconsistencies. Data analysts must use various tools, such as Python's Pandas or R’s dplyr, to clean and transform data into a structured format. Efficient data wrangling allows analysts to focus on analysis rather than spending time fixing errors.

Wrangling typically involves handling missing values, correcting or removing outliers, and ensuring the data follows a consistent format. Once the data is cleaned, analysts can perform their analysis with confidence. The more thorough the cleaning process, the more reliable the analysis and the more accurate the insights drawn from it. Effective data wrangling results in high-quality data, leading to more meaningful and actionable insights.
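The cleaning steps described above can be sketched with Pandas. This is a minimal illustration, not a general recipe: the column names, the sample records, the median fill, and the 10,000 cap are all assumptions chosen for the example.

```python
import pandas as pd

# Hypothetical raw records with typical problems: inconsistent casing,
# stray whitespace, a duplicate row, a missing value, and an extreme value.
raw = pd.DataFrame({
    "customer": [" alice", "Bob ", "bob", "Carol", "Dave"],
    "amount": [120.0, 80.0, 80.0, None, 1_000_000.0],
})


def clean(df: pd.DataFrame) -> pd.DataFrame:
    out = df.copy()
    # Enforce a consistent format for the text column.
    out["customer"] = out["customer"].str.strip().str.title()
    # Drop rows that are exact duplicates after normalization.
    out = out.drop_duplicates()
    # Fill missing amounts with the median (one common choice among several).
    out["amount"] = out["amount"].fillna(out["amount"].median())
    # Cap extreme values at an assumed business limit rather than deleting them.
    out["amount"] = out["amount"].clip(upper=10_000.0)
    return out.reset_index(drop=True)


cleaned = clean(raw)
print(cleaned)
```

Whether to fill, cap, or drop problematic values depends on the dataset and the question being asked, so each of these choices should be revisited for real data.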

Statistical analysis is a core skill for any data analyst. Understanding fundamental statistical concepts such as mean, median, variance, and standard deviation is essential for drawing meaningful conclusions from data. Analysts use these concepts to summarize large datasets and understand their underlying trends. They may also apply more advanced statistical techniques such as regression analysis, hypothesis testing, and correlation to determine relationships within the data.

Statistical tools such as SPSS, R, and Python’s SciPy library are commonly used for performing these analyses. These tools allow analysts to test assumptions and verify the validity of their results. Strong statistical skills enable data analysts to make data-driven decisions with confidence, ensuring that business strategies are based on robust, quantitative evidence.
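As a rough illustration of the summary statistics and correlation mentioned above, the sketch below uses only Python's standard library. The figures are made up for the example, and `pearson` is a hypothetical helper written from the textbook definition; in practice an analyst would more likely reach for SciPy or R.

```python
import math
import statistics

# Hypothetical data: monthly ad spend and the resulting sales (arbitrary units).
ad_spend = [10, 20, 30, 40, 50]
sales = [25, 45, 65, 85, 105]


def pearson(xs, ys):
    """Pearson correlation coefficient computed from its definition."""
    mx, my = statistics.mean(xs), statistics.mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


summary = {
    "mean": statistics.mean(sales),
    "median": statistics.median(sales),
    "variance": statistics.variance(sales),  # sample variance (divides by n - 1)
    "stdev": statistics.stdev(sales),        # sample standard deviation
}
r = pearson(ad_spend, sales)

print(summary)
print(f"correlation between ad spend and sales: {r:.3f}")
```

A correlation close to 1 here only reflects how the sample data was constructed; on real data, a strong correlation still says nothing about causation.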

Data visualization is an essential skill for a data analyst, as it helps present complex data clearly and engagingly. Tools like Tableau, Power BI, and Python libraries like Matplotlib and Seaborn allow analysts to create charts, graphs, and dashboards. Visualization not only makes it easier to identify patterns but also helps communicate findings to stakeholders who may not be familiar with raw data.

An important aspect of data visualization is selecting the right type of chart or graph to represent specific insights. For instance, bar charts are effective for comparing quantities, while scatter plots help identify correlations between variables. Effective visualizations help decision-makers understand the data quickly, making it easier for them to take action based on the insights provided by the analyst.
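To make the chart-selection point concrete, here is a small Matplotlib sketch that draws a bar chart for comparing quantities next to a scatter plot for inspecting a relationship. The region names and numbers are invented for illustration.

```python
import matplotlib

matplotlib.use("Agg")  # render off-screen so the sketch runs without a display
import matplotlib.pyplot as plt

# Hypothetical data for the two chart types.
regions = ["North", "South", "East", "West"]
revenue = [120, 95, 140, 80]
ad_spend = [10, 20, 30, 40, 50]
sales = [27, 44, 68, 82, 108]

fig, (ax_bar, ax_scatter) = plt.subplots(1, 2, figsize=(9, 4))

# Bar chart: compares a quantity across discrete categories.
ax_bar.bar(regions, revenue)
ax_bar.set_title("Revenue by region")
ax_bar.set_ylabel("Revenue")

# Scatter plot: shows how two numeric variables move together.
ax_scatter.scatter(ad_spend, sales)
ax_scatter.set_title("Ad spend vs. sales")
ax_scatter.set_xlabel("Ad spend")
ax_scatter.set_ylabel("Sales")

fig.tight_layout()
```

Calling `fig.savefig("charts.png")` afterwards would write the figure to disk; the file name is arbitrary.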

Proficiency in SQL is fundamental for data analysts. SQL allows analysts to query relational databases, retrieve relevant data, and join multiple datasets to generate insights. A solid understanding of SQL helps analysts efficiently interact with databases, create custom reports, and filter large amounts of data. SQL also allows analysts to automate repetitive tasks and queries, making data retrieval much faster.

Database management goes beyond querying. Data analysts also need to understand how to structure databases, normalize data, and ensure that the data is stored optimally. Knowing how to work with databases like MySQL, PostgreSQL, and SQL Server is crucial for managing and organizing large datasets. Efficient database management ensures that analysts can access and manipulate data without delays, leading to quicker insights.
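The querying and joining described above can be tried without a database server by using Python's built-in `sqlite3` module. The two-table schema and the sample rows are assumptions made for this sketch; the same SQL would need only minor changes for MySQL, PostgreSQL, or SQL Server.

```python
import sqlite3

# In-memory database with a small normalized schema (hypothetical tables).
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
    CREATE TABLE orders (
        id INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customers(id),
        amount REAL NOT NULL
    );
    INSERT INTO customers VALUES (1, 'Alice'), (2, 'Bob'), (3, 'Carol');
    INSERT INTO orders VALUES (1, 1, 120.0), (2, 1, 30.0), (3, 2, 80.0);
""")

# LEFT JOIN keeps customers with no orders; COALESCE turns their NULL sum into 0.
query = """
    SELECT c.name,
           COUNT(o.id) AS order_count,
           COALESCE(SUM(o.amount), 0) AS total
    FROM customers AS c
    LEFT JOIN orders AS o ON o.customer_id = c.id
    GROUP BY c.id, c.name
    ORDER BY total DESC, c.name
"""
report = conn.execute(query).fetchall()
conn.close()

for name, order_count, total in report:
    print(name, order_count, total)
```

Keeping customers and orders in separate tables linked by `customer_id` is a simple instance of normalization: each customer's name is stored once, and the join reassembles the report on demand.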

Excel is still one of the most widely used tools for data analysis, and a data analyst should be proficient in its advanced features. Analysts use Excel to manipulate datasets, perform calculations, and generate visualizations. Functions like VLOOKUP, pivot tables, and advanced formulas are essential for working with data efficiently. Excel is often the first tool analysts use for exploratory data analysis due to its user-friendly interface and flexibility.

While Excel is useful for smaller datasets, it can become cumbersome for larger datasets. However, Excel still provides powerful features for data manipulation and analysis. Analysts use Excel to organize and visualize data straightforwardly, allowing them to create reports and dashboards that help communicate findings clearly to stakeholders.

Proficiency in programming languages like Python or R is becoming increasingly essential for data analysts. These languages offer extensive libraries and packages for data manipulation, analysis, and visualization. Python’s libraries, such as Pandas, NumPy, and Matplotlib, are widely used for handling large datasets, performing statistical analysis, and creating visualizations. R, on the other hand, is particularly strong in statistical modeling and data visualization.

Programming skills allow data analysts to automate repetitive tasks, process data at scale, and implement machine learning models. Python and R are both open-source and have large communities, which means data analysts can leverage resources, tutorials, and code examples from these communities to enhance their skills. The ability to program enables analysts to handle complex datasets more effectively.

Business acumen is a critical skill for data analysts, as it enables them to understand the context behind the data they are working with. Analysts must align their work with the organization’s business objectives and ensure that their findings are relevant and actionable. This requires a deep understanding of the company’s goals, industry trends, and challenges, as well as the specific needs of different departments.

Business acumen helps data analysts communicate their findings in a way that resonates with decision-makers. Analysts need to identify business problems and leverage data to find solutions that directly impact the bottom line. This skill ensures that their analyses contribute to improving business performance and supporting strategic decisions.

Critical thinking is essential for data analysts to approach data objectively and uncover hidden patterns. Analysts need to ask the right questions, challenge assumptions, and think analytically to identify trends and draw conclusions. Strong problem-solving skills allow them to break down complex business problems and find innovative solutions by applying data-driven insights.

Data analysts must also evaluate the quality of the data they work with. This involves identifying potential biases or errors in the data and ensuring that the analysis is reliable. Effective critical thinking helps analysts provide accurate and relevant insights, leading to better decision-making across the organization.

Attention to detail is crucial for a data analyst, as even small errors in data can have significant implications for the analysis. Analysts need to ensure that the data is accurate, clean, and properly formatted. They should be meticulous in checking for inconsistencies, outliers, or missing data that could affect the reliability of the results.

Additionally, analysts must ensure that their visualizations and reports are clear and error-free. A minor mistake in a chart or a miscalculation can mislead stakeholders and lead to incorrect conclusions. Attention to detail ensures that the final output is of high quality and ready for presentation to the team or leadership.

Data analysts must have strong communication skills to convey their findings to stakeholders effectively. This includes the ability to explain complex data and analytical concepts in simple terms. Analysts should also be able to create reports, presentations, and dashboards that summarize key insights and recommendations clearly and concisely.

Good communication skills allow data analysts to collaborate effectively with other teams, ensuring that their insights are understood and acted upon. Whether presenting to executives or working with technical teams, clear communication is essential for driving business decisions based on data analysis.

Data science is revolutionizing various industries by enabling businesses to leverage data for actionable insights, predictive models, and better decision-making. By combining statistical techniques, machine learning, and data analysis, data science helps organizations optimize operations, enhance customer experiences, and unlock new business opportunities.

Whether in healthcare, finance, or marketing, data science's applications extend across a wide range of fields, driving innovation and efficiency. Companies rely on data science to process large amounts of data, make predictions, and automate tasks, ultimately enabling smarter, data-driven business strategies. Here are some key applications of data science that have significantly impacted industries worldwide.

Data science is transforming healthcare by enabling more accurate diagnostics, personalized treatment, and better resource management. With the help of predictive models, healthcare professionals can forecast disease outbreaks, predict patient outcomes, and recommend the most effective treatments. In medical research, data science is used to analyze large datasets, accelerating drug discovery and understanding complex diseases. Machine learning algorithms also help in the development of AI-driven diagnostic tools that assist doctors in detecting diseases earlier.

Furthermore, by analyzing patient data, data science allows for personalized medicine, where treatments are tailored to the individual's genetic makeup and lifestyle. This results in more effective therapies and improved patient outcomes. Hospitals also use data science to optimize operational efficiency, improve scheduling, and reduce costs while ensuring high-quality care.

Data science plays a vital role in the financial industry, from fraud detection to risk management and investment analysis. By applying machine learning algorithms to large volumes of transaction data, banks can identify suspicious activities and detect fraudulent transactions in real time. Data science also enables financial institutions to assess risk, predict market trends, and develop more accurate financial models.

Risk management is another area where data science makes a significant impact. By analyzing historical data and real-time information, financial institutions can better predict and manage potential risks, such as credit defaults, market volatility, and operational inefficiencies. Financial analysts also use data science tools to create personalized investment portfolios and optimize asset allocation, enhancing the return on investment for clients.

In marketing, data science enables businesses to analyze customer behavior, segment markets, and personalize offers to target specific consumer groups. By analyzing large datasets from social media, transaction histories, and customer interactions, companies can uncover patterns and trends that drive customer preferences. This data-driven approach helps businesses create more effective marketing strategies and deliver personalized experiences.

Customer insights generated through data science allow companies to improve their products, adjust marketing campaigns, and forecast future demand. Companies also use machine learning models to predict customer churn and enhance customer retention strategies, improving long-term profitability.

Data science is widely used to streamline supply chains by improving inventory management, demand forecasting, and logistics. Machine learning models are used to analyze past sales data, predict demand fluctuations, and optimize inventory levels to reduce waste and stockouts. This helps businesses maintain the right balance between supply and demand, ensuring the efficient flow of goods and services.
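As a deliberately simple stand-in for the demand-forecasting models mentioned above, the sketch below uses a moving average rather than a machine learning model. The function names, the three-week window, and the safety-stock figure are all assumptions for illustration; production systems typically account for seasonality, promotions, and lead times.

```python
def moving_average_forecast(history, window=3):
    """Forecast the next period as the mean of the last `window` observations."""
    if window <= 0 or len(history) < window:
        raise ValueError("history must contain at least `window` observations")
    return sum(history[-window:]) / window


def reorder_quantity(forecast, on_hand, safety_stock=0):
    """Units to order so stock covers the forecast plus a safety buffer."""
    return max(0, round(forecast + safety_stock - on_hand))


# Hypothetical weekly unit sales for one product.
weekly_sales = [100, 120, 110, 130, 140]

forecast = moving_average_forecast(weekly_sales)
qty = reorder_quantity(forecast, on_hand=90, safety_stock=20)

print(f"forecast for next week: {forecast:.1f} units")
print(f"suggested reorder quantity: {qty} units")
```

A baseline like this is also useful as a yardstick: a more sophisticated model is only worth deploying if it forecasts noticeably better than the moving average.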

In addition, data science is applied to optimize transportation routes, reduce shipping costs, and improve delivery efficiency. By analyzing real-time traffic and weather data, logistics companies can make better routing decisions, minimizing delays and improving delivery times. Predictive analytics can also help businesses anticipate disruptions in the supply chain, allowing them to manage risks proactively.

Data science is increasingly used in sports analytics to improve team performance, predict outcomes, and enhance fan engagement. Teams use data science to analyze player performance, injury risks, and game strategies. By examining player statistics, historical performance, and biomechanical data, coaches can make data-driven decisions on player selection, game tactics, and training regimens.

In addition to performance analysis, data science helps teams predict game outcomes using advanced machine learning models. Sports franchises also leverage data science to improve fan engagement by personalizing marketing campaigns, predicting fan preferences, and optimizing ticket pricing. Furthermore, data science helps in monitoring player health and predicting potential injuries, ensuring a longer and healthier career for athletes.

Data science plays a critical role in e-commerce and retail by enhancing the shopping experience, improving inventory management, and optimizing pricing strategies. Online retailers use machine learning algorithms to personalize product recommendations based on customer preferences, browsing history, and purchase behavior. This increases customer engagement and drives sales.

Data science also helps retailers analyze purchasing trends, predict demand, and optimize stock levels. Predictive analytics enables businesses to forecast sales and adjust inventory accordingly, reducing overstocking and understocking. Retailers also use data science to implement dynamic pricing strategies that adjust in real time based on market conditions, demand, and competitor pricing.

The energy sector leverages data science for demand forecasting, predictive maintenance, and efficiency optimization. By analyzing consumption patterns, energy providers can predict peak demand periods and optimize energy distribution, reducing waste and ensuring a stable energy supply. Data science also plays a crucial role in the integration of renewable energy sources by forecasting energy production and consumption patterns.

In addition, predictive maintenance models are used to monitor equipment health and identify potential failures before they occur. This helps reduce downtime, extend equipment lifespan, and lower maintenance costs. Data science also supports energy companies in developing more sustainable practices by analyzing energy usage trends and recommending energy-saving measures to consumers.

Governments use data science for a wide range of applications, from improving public services to crafting better public policies. By analyzing large datasets, governments can identify trends in crime, health, and education, enabling them to make data-driven decisions that improve citizens' lives. For example, predictive models can forecast traffic patterns, helping cities optimize road infrastructure and reduce congestion.

Data science also aids in improving public health initiatives by analyzing disease outbreaks, vaccination rates, and health risks in specific communities. Policymakers use data science to craft effective regulations, measure the impact of social programs, and ensure that resources are allocated efficiently. Moreover, data science supports crime prediction and prevention strategies, enabling law enforcement to allocate resources effectively.

Data science is a key component in the development of autonomous vehicles, enabling real-time decision-making and navigation. Machine learning algorithms analyze sensor data from cameras, radar, and LiDAR systems to make decisions regarding driving patterns, obstacle detection, and route optimization. Autonomous vehicles rely on vast amounts of data to improve safety, efficiency, and overall driving experience.

In addition, data science plays a crucial role in transportation logistics by optimizing traffic flow, public transportation schedules, and predictive maintenance for vehicles. Cities use data science to manage transportation infrastructure, reducing congestion and improving travel times. Predictive analytics also aids in route planning, reducing fuel consumption and costs.

Data science has transformed the education sector by enabling personalized learning and improving student outcomes. Educational institutions use data science to analyze student performance, identify learning patterns, and develop tailored curricula that cater to individual needs. Predictive models help educators identify students at risk of falling behind and provide timely interventions to improve learning outcomes.

Data science also facilitates the development of intelligent tutoring systems that adapt to students' learning styles and provide real-time feedback. In addition, learning management systems (LMS) use data science to track student progress, recommend resources, and measure the effectiveness of teaching methods. This results in a more personalized and efficient learning experience for students.

Big data professionals manage and work with massive datasets that exceed the capabilities of traditional data-processing tools. Their primary task is to design, implement, and maintain systems capable of processing, storing, and analyzing large-scale data. Big data engineers are essential for setting up infrastructures that handle data effectively and efficiently. They ensure that data is organized and optimized for further analysis by data scientists and analysts.

Their work involves setting up big data frameworks, like Hadoop and Apache Spark, for managing the data lifecycle. Big data professionals often collaborate with data scientists to ensure that data is readily available for analysis. Their ability to manage large volumes of data, ensuring it's processed in real-time or batch modes, is crucial to ensuring seamless integration across organizational systems.

Big data refers to the massive volume of structured and unstructured data generated by individuals, businesses, and machines daily. The ability to analyze and extract insights from big data is a game-changer for businesses, offering a wealth of opportunities to improve decision-making and operational efficiency. By leveraging big data analytics, companies can gain insights into customer behaviors, optimize supply chains, predict market trends, and even create new revenue streams.

The widespread adoption of big data technologies has reshaped industries by enabling data-driven strategies and fostering innovation. In an increasingly interconnected world, the importance of big data continues to grow as organizations face more complex challenges and opportunities. By utilizing advanced analytics, businesses can unlock the full potential of the vast data they generate. Big data not only enhances decision-making processes but also drives cost savings, revenue growth, and improved customer experiences. As organizations strive to stay competitive, harnessing big data's potential has become essential for success in the digital age.

While big data offers powerful advantages, it also presents several challenges that can hinder its effectiveness. The vast volume, variety, and velocity of data require sophisticated tools and techniques to process and analyze. Managing such large datasets can overwhelm organizations, especially those without the right infrastructure.

Moreover, as businesses rely on big data for decision-making, they face risks regarding data security, quality, and the complexity of handling massive datasets. Big data can also lead to a reliance on data-driven decisions that do not always reflect the full context. Issues like data privacy, high operational costs, and potential biases in data analysis are concerns that businesses need to address. In this section, we will discuss the key disadvantages associated with big data.

Big data analytics requires specialized tools to handle and process large volumes of data efficiently. These tools help organizations manage, store, and analyze vast amounts of structured and unstructured data from various sources. Big data tools are designed to work with distributed computing environments, ensuring that data can be processed quickly and scaled effectively.

These tools are essential for extracting insights from large datasets and supporting data-driven decision-making. As big data continues to grow, organizations rely on an ecosystem of tools to enable them to perform complex analyses, visualize data, and manage massive amounts of information. The tools used in big data help reduce the challenges of data complexity and ensure that businesses can unlock the value hidden in vast datasets.

Big data specialists are professionals who manage and analyze vast amounts of data to extract valuable insights. This field demands a unique skill set due to the sheer volume and complexity of the data involved. Big data specialists work with distributed computing systems, advanced analytics, and complex algorithms to manage, process, and analyze data in real time.

They need a deep understanding of both the technical and business aspects of big data to succeed. Becoming a big data specialist requires expertise in a range of areas, including cloud computing, database management, data engineering, and machine learning. The skills required in this field are typically more advanced than those of a data analyst due to the complexity of big data systems. Below are the essential skills needed to become a big data specialist.

Hadoop and Spark are foundational technologies for big data processing, and proficiency in these platforms is essential for big data specialists. Hadoop’s distributed computing model allows specialists to process large datasets across a cluster of computers. This scalability is essential for handling big data efficiently. Spark, on the other hand, is designed for in-memory processing, enabling faster data analysis.

Big data specialists need to be comfortable using these technologies for batch processing (Hadoop) and real-time analytics (Spark). They should be proficient in setting up clusters, optimizing performance, and using the frameworks to develop big data pipelines. Knowledge of tools like HDFS (Hadoop Distributed File System) and Spark’s MLlib for machine learning applications enhances the skill set further, enabling specialists to work effectively in a big data environment.
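The batch model that Hadoop popularized can be illustrated without a cluster. The following is a minimal single-machine sketch of the map, shuffle, and reduce phases, assuming a word-count task; the function names are hypothetical and this is not the Hadoop or Spark API.

```python
from collections import defaultdict
from itertools import chain


def map_phase(chunk):
    """Emit a (word, 1) pair for every word in one chunk of text."""
    return [(word.lower(), 1) for word in chunk.split()]


def shuffle_phase(pairs):
    """Group emitted values by key, as a cluster does between map and reduce."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(grouped):
    """Sum the values collected for each key."""
    return {key: sum(values) for key, values in grouped.items()}


def word_count(chunks):
    # On a real cluster each chunk would be mapped on a different node;
    # here the chunks are simply processed one after another.
    mapped = chain.from_iterable(map_phase(chunk) for chunk in chunks)
    return reduce_phase(shuffle_phase(mapped))


if __name__ == "__main__":
    chunks = ["big data needs big tools", "spark processes data in memory"]
    print(word_count(chunks))
```

Because each chunk is mapped independently, the same logic can be spread across many machines, which is the property that makes this style of processing scale.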

Big data specialists must have an in-depth understanding of both SQL and NoSQL databases to work with different types of data. While SQL is used for querying structured data, NoSQL databases such as MongoDB, Cassandra, and HBase are essential for managing unstructured and semi-structured data. NoSQL databases are optimized for scalability and flexibility, making them ideal for big data applications.

Big data specialists need to be skilled in writing complex SQL queries for large datasets and familiar with the principles of NoSQL databases. They should also understand how to store and retrieve large volumes of data efficiently from these databases. This expertise allows them to work with diverse data formats and scale their systems to handle the ever-growing amount of data.
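To make the contrast concrete, here is a small sketch that uses Python's built-in `sqlite3` module for the structured case and plain dictionaries to stand in for documents in a store such as MongoDB. The table, field names, and helper functions are illustrative assumptions, not a real NoSQL client.

```python
import sqlite3


def total_spend_by_customer(orders):
    """Structured data: fixed columns, aggregated with a SQL query."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (customer TEXT, amount REAL)")
    conn.executemany("INSERT INTO orders VALUES (?, ?)", orders)
    rows = conn.execute(
        "SELECT customer, SUM(amount) FROM orders GROUP BY customer"
    ).fetchall()
    conn.close()
    return dict(rows)


def users_with_field(documents, field):
    """Semi-structured data: each document may carry different fields."""
    return [doc["user"] for doc in documents if field in doc]


if __name__ == "__main__":
    orders = [("alice", 30.0), ("bob", 12.5), ("alice", 20.0)]
    print(total_spend_by_customer(orders))

    documents = [
        {"user": "alice", "tags": ["iot", "sensor"], "device": {"id": 7}},
        {"user": "bob", "tags": ["social"]},
    ]
    print(users_with_field(documents, "device"))
```

The SQL table rejects rows that do not fit its columns, while the document list accepts records of any shape and leaves it to the query to cope with missing fields.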

Cloud computing is an essential skill for big data specialists, as many big data platforms are deployed in the cloud. Cloud services like AWS, Google Cloud, and Microsoft Azure offer scalable infrastructure and tools for managing and analyzing large datasets. Big data specialists need to understand how to deploy and manage big data solutions in the cloud, utilizing services like AWS S3 for storage, Google BigQuery for analytics, and Azure Databricks for processing.

Cloud computing knowledge is also crucial for scaling big data solutions. With cloud platforms, specialists can easily scale their infrastructure up or down based on the data volume, ensuring optimal performance and cost-efficiency. Expertise in cloud services ensures that big data systems can handle high-volume workloads and provide real-time analytics, which is essential for modern business operations.

Big data specialists must understand data engineering, which involves designing and managing the architecture for data collection, storage, and processing. This includes knowledge of ETL (Extract, Transform, Load) processes, which are critical for moving data from various sources into data warehouses for analysis. A big data specialist should be skilled in using ETL tools and frameworks to build data pipelines that clean, transform, and load data efficiently.

Data engineering is foundational for big data analysis, as it ensures that the data is properly structured and accessible for analysis. Specialists need to be proficient in tools like Apache NiFi and Talend to automate the flow of data from different systems. Strong data engineering skills help ensure that big data projects are efficient, reliable, and scalable, contributing to the overall success of the analysis.
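The extract, transform, and load steps described above can be sketched in a few lines. This example assumes a tiny CSV of temperature readings and an in-memory SQLite table acting as the warehouse; the data, column names, and function names are made up for illustration, and tools like Apache NiFi or Talend would handle scheduling, monitoring, and scale.

```python
import csv
import io
import sqlite3

RAW_CSV = """id,city,temperature_c
1, Berlin ,21.5
2,paris,
3,Madrid,30.1
"""


def extract(text):
    """Read raw rows from a CSV source."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Clean values and drop rows with a missing measurement."""
    cleaned = []
    for row in rows:
        temperature = row["temperature_c"].strip()
        if not temperature:
            continue
        city = row["city"].strip().title()
        cleaned.append((int(row["id"]), city, float(temperature)))
    return cleaned


def load(rows, conn):
    """Write the cleaned rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS readings "
        "(id INTEGER, city TEXT, temperature_c REAL)"
    )
    conn.executemany("INSERT INTO readings VALUES (?, ?, ?)", rows)
    conn.commit()


def run_pipeline(text):
    conn = sqlite3.connect(":memory:")
    load(transform(extract(text)), conn)
    return conn


if __name__ == "__main__":
    conn = run_pipeline(RAW_CSV)
    print(conn.execute("SELECT * FROM readings ORDER BY id").fetchall())
```

Keeping the three stages as separate functions makes each one testable on its own, which matters once a pipeline pulls from many sources.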

Machine learning and artificial intelligence are essential skills for big data specialists, as these technologies enable predictive analytics and data-driven decision-making. Machine learning algorithms can uncover hidden patterns in data and generate models that predict future trends. Big data specialists need to be proficient in using machine learning libraries like Scikit-learn, TensorFlow, and PyTorch to develop and train models on large datasets.

In addition to basic machine learning algorithms, specialists need to understand advanced techniques such as deep learning and natural language processing (NLP). These technologies are increasingly used to analyze unstructured data, like text, images, and video. Big data specialists with expertise in AI can apply these advanced techniques to provide more comprehensive insights and automate decision-making processes across industries.
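As a very small illustration of a model that predicts a future value from past data, the sketch below fits a straight line by ordinary least squares in plain Python. It is a teaching example under simplifying assumptions (one input variable, a handful of points); libraries such as Scikit-learn, TensorFlow, or PyTorch would be used for real workloads, and the function names here are hypothetical.

```python
def fit_line(xs, ys):
    """Return (slope, intercept) of the least-squares line through the points."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    if variance == 0:
        raise ValueError("all x values are identical; slope is undefined")
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Apply a fitted (slope, intercept) model to a new input."""
    slope, intercept = model
    return slope * x + intercept


if __name__ == "__main__":
    months = [1, 2, 3, 4]
    sales = [3.0, 5.0, 7.0, 9.0]
    model = fit_line(months, sales)
    print(model, predict(model, 5))
```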

In the world of big data, systems must be able to scale to accommodate the ever-growing volume of data. Big data specialists need to design solutions that can handle both increasing data sizes and higher user demand without compromising performance. This requires a deep understanding of distributed computing systems, load balancing, and parallel processing. Scalability ensures that as the business grows, the data infrastructure can grow with it.

Performance optimization is just as crucial, as inefficient systems can slow down data processing and analysis. Big data specialists need to identify bottlenecks and optimize processes to reduce latency and improve system throughput. This may involve tuning the performance of the underlying hardware or optimizing code and algorithms. By focusing on scalability and performance optimization, big data specialists ensure that the systems they build can handle large datasets efficiently and continue to provide valuable insights over time.
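The idea behind parallel processing is to split the data into partitions, work on each partition independently, and combine the partial results. The sketch below shows that pattern with Python's standard `concurrent.futures` module. It assumes a simple summation task, and it uses threads only to keep the example short: in CPython, threads do not speed up CPU-bound work, so a real system would use separate processes or a distributed engine.

```python
from concurrent.futures import ThreadPoolExecutor


def partition(items, num_partitions):
    """Split items into contiguous partitions of roughly equal size."""
    if not items:
        return []
    size = -(-len(items) // num_partitions)  # ceiling division
    return [items[i:i + size] for i in range(0, len(items), size)]


def partial_sum(chunk):
    """Work done independently on one partition."""
    return sum(chunk)


def parallel_sum(items, num_partitions=4):
    """Sum items by combining per-partition results."""
    chunks = partition(items, num_partitions)
    if not chunks:
        return 0
    with ThreadPoolExecutor(max_workers=num_partitions) as pool:
        return sum(pool.map(partial_sum, chunks))


if __name__ == "__main__":
    print(parallel_sum(list(range(1, 101))))
```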

Data governance and security are critical components in managing big data. Big data specialists must understand how to keep data accurate, consistent, and protected from unauthorized access or breaches. Data governance frameworks align data management practices with legal and regulatory requirements, including data protection laws like GDPR or CCPA. Big data specialists need to design and implement policies for data stewardship, data quality, and access control.

Security is particularly important when dealing with big data, as large datasets can be lucrative targets for cyber-attacks. Big data specialists must ensure that sensitive data is encrypted and that proper authentication protocols are in place. They also need to be familiar with security technologies such as firewalls, intrusion detection systems (IDS), and data masking techniques to prevent data breaches. Effective data governance and security measures help protect both the data and the organization’s reputation.
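Data masking can take several forms. The sketch below shows two common ones using only the Python standard library: a keyed hash that replaces an identifier with a stable pseudonym, and a display mask that hides most of an email address. The function names and the 16-character truncation are illustrative choices, and a production system would also need proper key management.

```python
import hashlib
import hmac


def pseudonymize(value, secret_key):
    """Replace an identifier with a keyed hash so records remain joinable."""
    digest = hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


def mask_email(email):
    """Hide all but the first character of the local part; keep the domain."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}{'*' * (len(local) - 1)}@{domain}"


if __name__ == "__main__":
    key = b"example-key-not-for-production"
    print(pseudonymize("customer-1001", key))
    print(mask_email("alice@example.com"))
```

The keyed hash gives the same output for the same input, so analysts can still count or join on the pseudonym without seeing the original value.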

Big data specialists must be proficient in advanced analytical techniques to extract actionable insights from large and complex datasets. These techniques include predictive analytics, statistical modeling, time-series analysis, and anomaly detection. Understanding how to apply these methods to big data sets allows specialists to identify trends, forecast future outcomes, and detect outliers or unusual patterns that might indicate business opportunities or risks.

To apply advanced analytical techniques, big data specialists use programming languages like Python, R, and Scala, as well as specialized tools like Apache Mahout, Spark MLlib, and SAS. These techniques are essential for making data-driven decisions and creating algorithms that can automate processes. The ability to perform advanced analysis ensures that specialists can deliver in-depth insights that drive business strategies and improve operational efficiency.
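Anomaly detection, one of the techniques mentioned above, can be sketched with a z-score rule: flag any value that lies more than a chosen number of standard deviations from the mean. The threshold of 2.0 and the sample readings are assumptions for illustration; real datasets often call for more robust methods.

```python
import statistics


def find_anomalies(values, threshold=2.0):
    """Return (index, value) pairs whose z-score exceeds the threshold."""
    mean = statistics.fmean(values)
    stdev = statistics.pstdev(values)
    if stdev == 0:
        return []  # all values identical, nothing stands out
    return [
        (index, value)
        for index, value in enumerate(values)
        if abs(value - mean) / stdev > threshold
    ]


if __name__ == "__main__":
    readings = [100, 102, 98, 101, 99, 103, 97, 500]
    print(find_anomalies(readings))
```

Note that a single extreme value also inflates the mean and standard deviation it is measured against, which is one reason a fixed z-score cutoff is only a starting point.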

Real-time data processing is an essential skill for big data specialists working with time-sensitive data, such as information from IoT devices, social media feeds, or financial markets. Real-time data analysis allows businesses to make immediate decisions based on current data rather than relying on batch processing that may introduce delays. Big data specialists use tools like Apache Kafka, Apache Flink, and Spark Streaming to build systems that process and analyze data in real time.

Big data specialists need to ensure that the systems they design are capable of handling high-velocity data streams without losing data integrity or performance. Real-time data processing is crucial for industries such as finance, healthcare, and e-commerce, where quick decisions are essential. Specialists must design systems that can scale efficiently, manage data inflows in real time, and provide actionable insights instantly.
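Stream processors typically group incoming events into time windows and emit a result as each window closes. The following sketch imitates a fixed-size (tumbling) window over an in-memory event stream. It assumes events arrive in timestamp order and is not the Kafka, Flink, or Spark Streaming API; the function name is hypothetical.

```python
from collections import defaultdict


def tumbling_window_counts(events, window_seconds):
    """Yield (window_start, counts) each time a window closes.

    events: iterable of (timestamp_seconds, key), assumed ordered by time.
    """
    current_start = None
    counts = defaultdict(int)
    for timestamp, key in events:
        window_start = timestamp - (timestamp % window_seconds)
        if current_start is None:
            current_start = window_start
        if window_start != current_start:
            # The previous window is complete, so emit it and start a new one.
            yield current_start, dict(counts)
            counts = defaultdict(int)
            current_start = window_start
        counts[key] += 1
    if current_start is not None:
        yield current_start, dict(counts)


if __name__ == "__main__":
    stream = [(0, "a"), (3, "b"), (9, "a"), (10, "a"), (14, "b"), (21, "a")]
    for window in tumbling_window_counts(stream, 10):
        print(window)
```

Because the function is a generator, results are produced as soon as each window closes rather than after the whole stream has been read.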

Java and Scala are two important programming languages commonly used in big data applications. Scala is often used with Apache Spark, which is itself written in Scala, and its functional programming features make it well suited to handling large datasets in a distributed system. Java, on the other hand, remains a widely used language in big data platforms like Hadoop for building scalable applications and handling data processing tasks.

Big data specialists need to be proficient in both Java and Scala, as these languages enable them to write high-performance code for big data processing. Scala’s concise syntax and functional programming features make it ideal for building fast and reliable data pipelines, while Java offers robust libraries and tools for working with big data frameworks. Mastery of these programming languages allows specialists to develop complex big data solutions and optimize performance across platforms.

Big data refers to the enormous volumes of structured and unstructured data that are generated every day. Organizations and industries are increasingly leveraging big data technologies to unlock valuable insights that drive operational efficiency, innovation, and strategic decision-making.

By processing and analyzing large datasets, companies can identify patterns, trends, and correlations that would otherwise go unnoticed, providing a competitive advantage. From healthcare to finance and beyond, big data has a transformative impact across multiple sectors. The following are key applications where big data is making a significant difference.

Retailers leverage big data to predict customer behavior, optimize pricing strategies, and improve inventory management. By analyzing consumer shopping habits, browsing behavior, and social media interactions, retailers can forecast demand trends and recommend personalized offers. This allows businesses to target marketing campaigns more effectively, ensuring that the right products are promoted to the right customers at the right time. It also improves product placement strategies by identifying which items are in high demand, ensuring optimal stock levels, and reducing overstock.

Furthermore, predictive analytics can enhance customer satisfaction by providing tailored shopping experiences based on individual preferences. This can lead to more efficient product recommendations, time-sensitive discounts, and a more personalized shopping journey. By continuously gathering data from customer interactions, retailers can adjust their strategies to align with shifting consumer behaviors, ultimately improving customer loyalty and driving revenue growth. This data-driven approach helps retailers stay competitive in a fast-evolving market.

Big data plays a critical role in addressing climate change by providing accurate models of environmental systems. Satellite imagery, weather patterns, and ocean temperatures are just a few data sources used to track and predict climate trends. By analyzing massive datasets, scientists can forecast changes in global temperatures, sea levels, and weather events. These insights allow governments, organizations, and researchers to make better-informed decisions regarding climate policies, resource allocation, and environmental protection strategies.

In addition to forecasting long-term environmental changes, big data also helps in monitoring real-time conditions, such as air quality and water levels. Because it can process vast amounts of data in real time, it enables faster detection of environmental issues, such as pollution or droughts, and supports efforts to prevent or mitigate their impact. This proactive approach helps protect natural ecosystems and public health while promoting sustainable development practices across industries.

Big data is transforming the financial sector by enabling advanced fraud detection and risk management strategies. By analyzing transactional data across various platforms, financial institutions can identify irregular patterns or anomalies that may signal fraudulent activities. These systems use machine learning algorithms to monitor transactions in real time, ensuring quick detection and intervention, thus reducing the risk of financial fraud. This is especially important in identifying credit card fraud, identity theft, or money laundering, where rapid response is critical to minimizing damage.

Moreover, big data technologies help financial institutions assess overall financial health and predict potential risks. By analyzing market trends, historical data, and customer behavior, banks can make more informed decisions about loans, investments, and portfolio management. This leads to better risk mitigation strategies and more secure financial systems, benefiting both customers and institutions. The power of big data is helping shape a more secure financial environment, reducing risks and enhancing trust in the financial system.

Big data is revolutionizing how energy providers manage resources and optimize energy distribution. By collecting data from smart meters and sensors, energy companies can monitor usage patterns across different regions and make real-time adjustments to optimize the energy grid. This helps prevent waste, lowers operational costs, and ensures that energy is distributed efficiently. Predictive analytics also allows utilities to forecast energy demand, allowing for better planning and reducing the likelihood of outages or overproduction.

Furthermore, big data is playing a key role in integrating renewable energy sources like solar and wind power into the grid. By analyzing weather data, energy production rates, and consumption patterns, utilities can predict energy generation from renewable sources and align supply with demand. This ensures a stable and sustainable energy supply, reduces dependency on non-renewable resources, and helps in reducing carbon emissions, contributing to more environmentally friendly energy systems.

Big data is at the heart of the development of autonomous vehicles, enabling them to make real-time decisions based on a vast array of data inputs. These vehicles use sensors, GPS data, and cameras to detect road conditions, traffic, and obstacles, allowing for safer and more efficient navigation. By processing and analyzing these large datasets, autonomous vehicles can optimize their routes, reduce fuel consumption, and enhance passenger safety. This data-driven approach is making transportation safer, more efficient, and less dependent on human intervention.

In addition to improving individual vehicle performance, big data is being used to optimize traffic management systems. Cities collect data from traffic cameras, sensors, and GPS systems to analyze traffic patterns and adjust traffic signals accordingly. This helps reduce congestion, improve traffic flow, and decrease travel time for commuters. By integrating data from various sources, cities can develop smarter traffic management systems that promote sustainability, reduce emissions, and improve overall urban mobility.

Big data is revolutionizing supply chain management by providing insights that allow businesses to optimize operations. By analyzing historical data and real-time information from suppliers, manufacturers, and distributors, companies can identify inefficiencies and bottlenecks in their processes. This enables better demand forecasting, improved inventory management, and the ability to predict fluctuations in supply and demand. With this information, businesses can optimize their supply chains, ensuring that products are delivered on time and in the correct quantities, thus reducing costs and improving customer satisfaction.

Additionally, big data enables businesses to enhance the logistics and transportation aspects of their supply chain. By leveraging real-time data from GPS systems and tracking software, companies can optimize delivery routes, reduce fuel consumption, and minimize delays. This data-driven approach to supply chain management leads to greater efficiency, lower operational costs, and increased agility in responding to changing market demands. It also ensures a more sustainable and resilient supply chain in the face of global disruptions.

Big data is an essential tool for predicting trends and evaluating risks in the financial markets. By analyzing vast amounts of historical financial data, such as stock prices, economic indicators, and market reports, analysts can uncover patterns and trends that can inform investment decisions. Machine learning algorithms are used to analyze data in real-time, providing investors with valuable insights into market movements and helping them make more informed decisions. This predictive capability allows investors to navigate the volatility of financial markets better.

Additionally, big data helps in risk management by assessing the potential risks associated with different investment strategies. By analyzing the performance of various assets and financial instruments, big data tools can help predict potential market downturns or identify emerging opportunities. This enables traders and investors to optimize their portfolios, minimize exposure to risk, and enhance their chances of achieving higher returns. As a result, big data plays a crucial role in modern financial decision-making.

Big data is transforming agriculture by enabling farmers to increase productivity and reduce resource waste. By utilizing data from soil sensors, weather stations, and satellite imagery, farmers can monitor soil conditions, track crop growth, and optimize irrigation practices. This data helps farmers make more informed decisions about when to plant, water, and harvest, improving yields and reducing the environmental impact of farming. Big data also enables farmers to forecast weather conditions and plan for potential crop diseases or pest outbreaks, ensuring better crop health and fewer losses.

Moreover, precision agriculture leverages data to improve sustainability by reducing the need for pesticides and fertilizers. Through data-driven insights, farmers can apply the right amount of resources where and when they are needed, reducing costs and minimizing environmental harm. This not only boosts the profitability of farming but also promotes responsible and sustainable agricultural practices, helping to meet the growing demand for food while conserving natural resources.

Big data is playing a pivotal role in public health by enabling the early detection and monitoring of disease outbreaks. By collecting data from hospitals, clinics, and public health organizations, authorities can quickly identify patterns of illness and track the spread of infectious diseases. This data is used to develop real-time disease surveillance systems, enabling health agencies to respond more effectively to health crises, such as pandemics or localized outbreaks. This proactive approach helps mitigate the spread of diseases and allocate resources more efficiently.

In addition to tracking infectious diseases, big data also supports personalized healthcare. By analyzing patient histories, genetic data, and lifestyle factors, big data can help in the development of tailored treatments and preventative care strategies. This results in better health outcomes by providing patients with treatments that are more suited to their individual needs. Big data also enhances the effectiveness of vaccination campaigns, disease prevention strategies, and health education programs by providing valuable insights into population health trends.

Big data is a key factor behind the success of content recommendation systems used by companies like Netflix, Amazon, and Spotify. By analyzing user behavior, preferences, and past interactions, big data algorithms can suggest products, movies, or songs that align with a user’s interests. This not only increases customer engagement but also boosts sales and brand loyalty by offering a personalized experience. The more data that is gathered, the more accurate these recommendations become, further enhancing the user experience.

Moreover, big data enables companies to track and analyze user interactions across different platforms, refining recommendation algorithms over time. By examining user feedback and engagement data, companies can continuously improve their recommendation systems to ensure that they are providing relevant and timely content. This ongoing optimization ensures that users receive personalized content that keeps them engaged, ultimately leading to better customer satisfaction and retention.
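One simple way to turn past interactions into suggestions is to recommend items liked by users with overlapping histories. The sketch below scores unseen items by how much the other user's history overlaps with the target user's. It is a toy illustration with made-up data and a hypothetical function name, not a description of how Netflix, Amazon, or Spotify build their systems.

```python
from collections import Counter


def recommend(history, user, top_n=2):
    """Suggest unseen items, weighted by overlap with similar users."""
    seen = history[user]
    scores = Counter()
    for other, items in history.items():
        if other == user:
            continue
        overlap = len(seen & items)
        if overlap == 0:
            continue  # no shared taste, so ignore this user
        for item in items - seen:
            scores[item] += overlap
    # Sort by score (highest first), then by name for a stable order.
    ranked = sorted(scores.items(), key=lambda pair: (-pair[1], pair[0]))
    return [item for item, _ in ranked[:top_n]]


if __name__ == "__main__":
    history = {
        "ana": {"A", "B"},
        "ben": {"A", "B", "C"},
        "cy": {"B", "D"},
        "dee": {"E"},
    }
    print(recommend(history, "ana"))
```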

The rapid adoption of emerging technologies such as big data, artificial intelligence, machine learning, and the Internet of Things (IoT) is profoundly transforming global economies. These technologies are driving innovation, improving efficiency, and reshaping industries by creating new opportunities for growth. By automating processes, enhancing decision-making, and enabling better resource management, they are helping businesses reduce costs and optimize performance.

However, the increasing reliance on technology is also creating new challenges, such as the displacement of jobs, data privacy concerns, and the need for new skills in the workforce. As a result, governments, businesses, and workers must adapt to this technological revolution to ensure long-term economic stability and prosperity.

The impact of these technologies is felt across various sectors, from manufacturing to healthcare, and continues to grow, pushing economies to evolve in response to these technological advances.

As technology continues to advance, salary trends in the tech industry have seen significant growth, driven by the increasing demand for specialized skills in fields like artificial intelligence, data science, cybersecurity, and software development. Professionals with expertise in emerging technologies are commanding higher salaries due to the need for their advanced skill sets in a competitive job market.

Roles such as data scientists, cloud engineers, and AI specialists are seeing particularly strong demand, with salaries consistently rising year after year. Companies are willing to offer higher compensation packages to attract top talent, often supplementing base salaries with performance bonuses, stock options, and other incentives to retain skilled workers in a rapidly evolving environment. Salary data across various technology roles indicates a positive upward trajectory, with high-paying opportunities available in both large tech firms and startups.

According to recent data, the average salary for a software developer is approximately $105,000 annually, while data scientists earn an average of $115,000. Cybersecurity professionals earn an average of $110,000, with some specialized roles commanding significantly more. The trend suggests that salaries for technical roles will continue to rise, especially for those with expertise in AI, machine learning, and cloud technologies. These salary increases reflect the critical importance of these technologies in driving innovation and economic growth.

Data scientists and big data professionals play a pivotal role in analyzing and interpreting large volumes of data to extract valuable insights that drive business decisions. Data scientists typically work with a variety of data types, including structured, semi-structured, and unstructured data, utilizing statistical methods, machine learning algorithms, and data visualization techniques to uncover patterns and trends.

They collaborate with cross-functional teams to identify business problems and apply data-driven solutions, often leveraging programming languages such as Python, R, and SQL. In addition to building predictive models, data scientists are responsible for data preprocessing, feature engineering, and conducting experiments to validate hypotheses, ensuring that data-driven insights are accurate and actionable.

Big data professionals, on the other hand, focus on managing and processing massive datasets that are too large or complex for traditional data-processing methods. They design and maintain data architectures, frameworks, and tools to store and process big data efficiently, often using technologies like Hadoop, Spark, and cloud platforms. They work closely with data engineers and analysts to ensure the seamless integration and scalability of big data solutions, enabling organizations to make real-time decisions based on data insights. Both data scientists and big data professionals contribute to enhancing data accessibility, scalability, and analytics, which are essential in today’s data-driven business landscape.

Data Science and Big Data are integral components of modern industries, with each playing a unique yet complementary role in the data ecosystem. Data Science focuses on the analysis and interpretation of data to extract valuable insights and support decision-making. Big Data, on the other hand, is concerned with the tools and technologies required to manage and process massive datasets.

Together, these fields empower organizations to unlock the full potential of their data, driving innovation, operational efficiency, and strategic growth across various sectors. Understanding their differences and synergies is crucial for businesses aiming to remain competitive in the digital age.

Data Science involves extracting insights from data using statistical and computational methods. In contrast, Big Data refers to large and complex datasets that require specialized tools and technologies for storage, management, and processing. Data Science analyzes these datasets to uncover patterns, trends, and predictive insights.

Big Data helps businesses optimize decision-making by analyzing large volumes of structured and unstructured data. It enables organizations to identify customer preferences, predict market trends, improve operations, and enhance customer experiences. The insights gained from Big Data help businesses become more agile, competitive, and efficient.

Key skills for Data Science include proficiency in programming languages like Python and R, a solid understanding of statistics and machine learning, and experience with data visualization tools. Knowledge of databases, data wrangling, and cloud computing is also essential. Effective communication skills are crucial for presenting insights clearly to stakeholders.

Common Big Data tools include Apache Hadoop for distributed storage and processing, Apache Spark for fast data processing, and Apache Kafka for real-time data streaming. Other tools include NoSQL databases like MongoDB, data integration tools like Talend, and cloud platforms like AWS and Google Cloud for scalable data storage and processing.

Yes, Data Scientists frequently work with Big Data. They use Big Data tools and technologies to process and analyze large datasets. While Data Science focuses on extracting insights and creating models, Big Data provides the infrastructure needed to store, manage, and scale these large volumes of data efficiently.

Challenges in working with Big Data include data security and privacy concerns, the need for high computing power, and the complexity of managing diverse data types. Ensuring data quality, dealing with unstructured data, and a shortage of skilled Big Data professionals are also significant obstacles businesses face.